Research

Dental Simulator

Project members: M.Sc. Maximilian Kaluschke, Prof. Dr. Gabriel Zachmann

In cooperation with Mahidol University, we are developing a simulation environment for dental surgery procedures such as caries removal and root canal access opening.

We simulate physically realistic collision behaviour when the drilling bur and the tooth come into contact. Additionally, we simulate the removal of tooth material during drilling. We model the tooth as three layers of different hardness (enamel, dentin, and pulp).
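As a rough illustration of how layer-dependent material removal could work, the following Python sketch erodes voxels inside a spherical bur at a rate inversely proportional to the hardness of their layer. The voxel representation, the `HARDNESS` values, and the names `Voxel` and `drill_step` are assumptions made for this example and are not taken from the project's implementation.

```python
from dataclasses import dataclass

# Hypothetical relative hardness per layer; illustrative values, not measured data.
HARDNESS = {"enamel": 5.0, "dentin": 2.0, "pulp": 0.5}


@dataclass
class Voxel:
    layer: str            # "enamel", "dentin" or "pulp"
    density: float = 1.0  # 1.0 = intact, 0.0 = fully removed


def drill_step(voxels, positions, bur_center, bur_radius, power, dt):
    """Reduce the density of every voxel inside the spherical bur.

    The removal rate is inversely proportional to the layer hardness,
    so enamel erodes more slowly than dentin or pulp.
    Returns the total amount of material removed in this step.
    """
    removed = 0.0
    radius_sq = bur_radius * bur_radius
    for voxel, pos in zip(voxels, positions):
        dist_sq = sum((p - c) ** 2 for p, c in zip(pos, bur_center))
        if dist_sq > radius_sq or voxel.density <= 0.0:
            continue  # outside the bur, or nothing left to remove
        delta = min(voxel.density, power * dt / HARDNESS[voxel.layer])
        voxel.density -= delta
        removed += delta
    return removed
```

With equal drilling power, a dentin voxel in this sketch loses material 2.5 times faster than an enamel voxel, which is the qualitative behaviour a three-layer hardness model is meant to capture.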

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de, mxkl at uni-bremen dot de

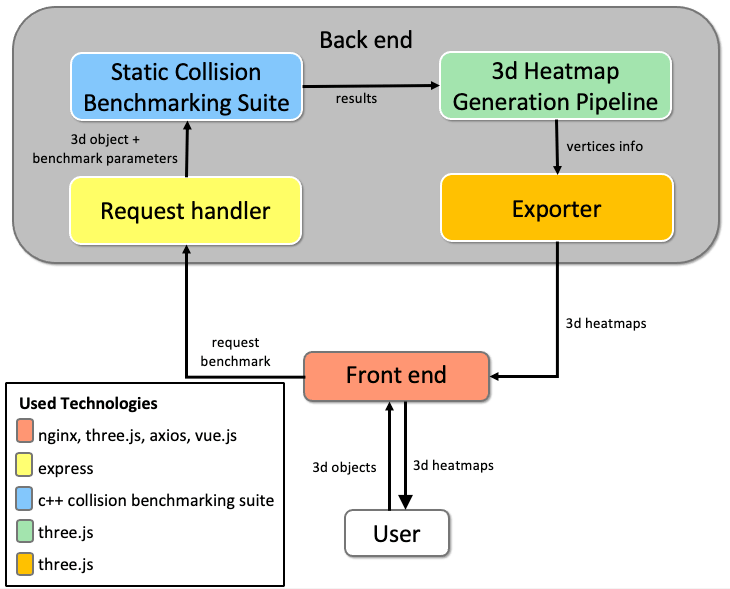

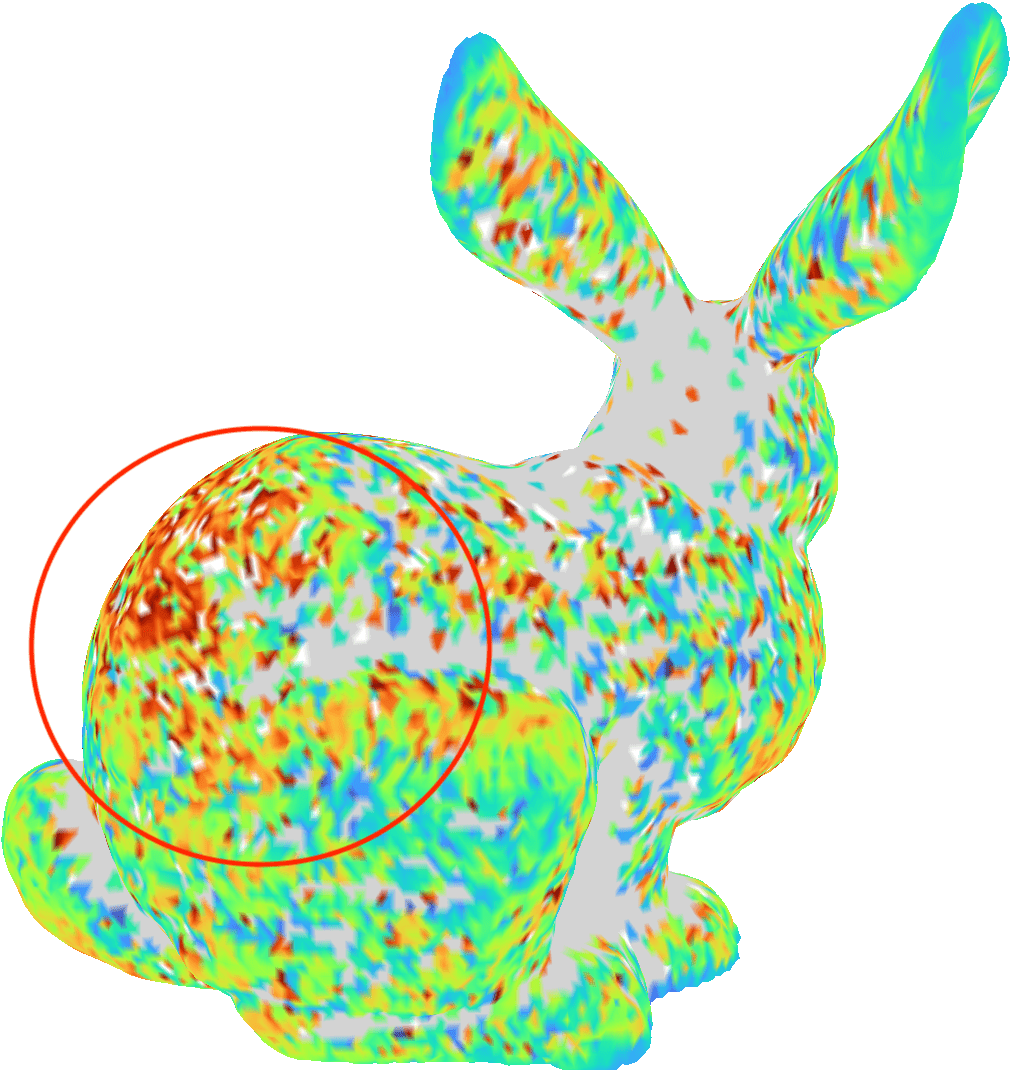

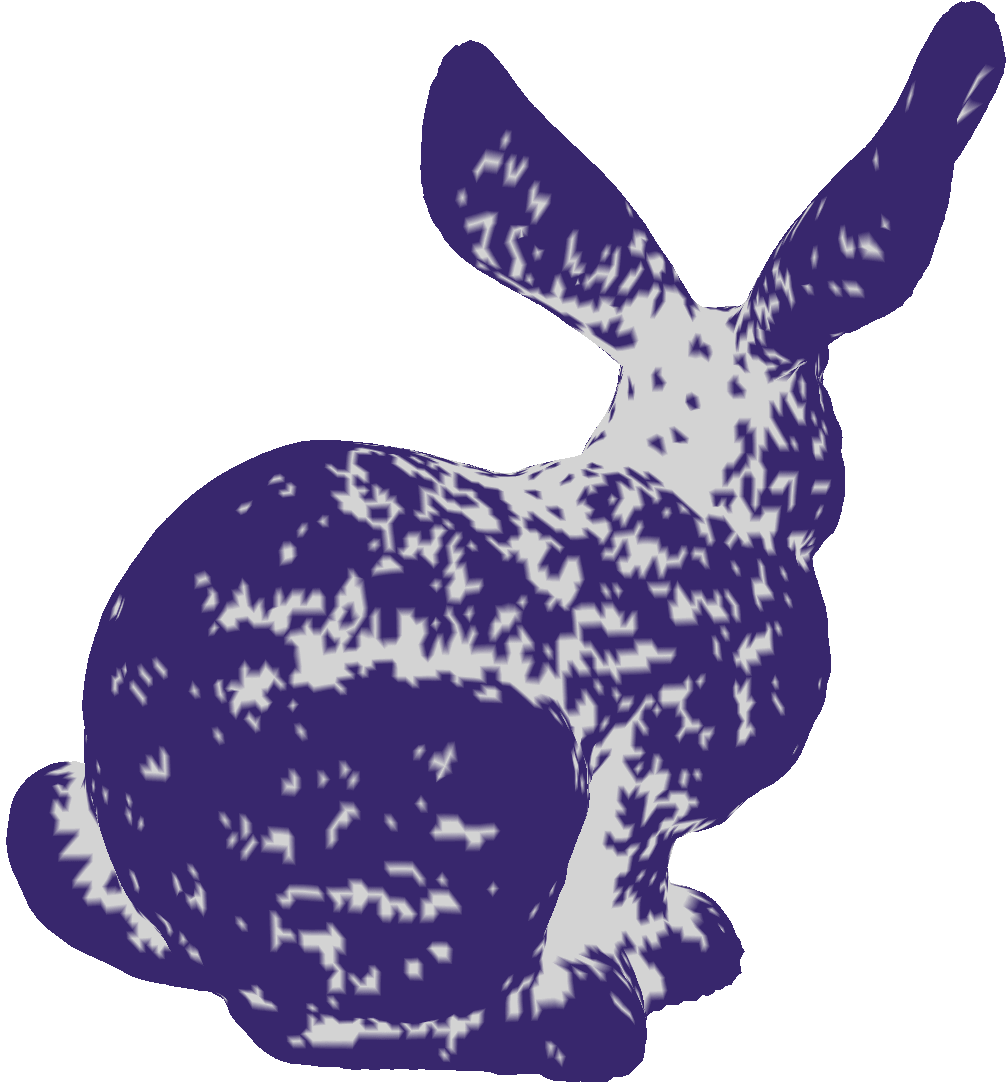

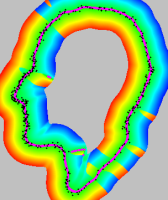

OpenCollBench - Benchmarking of Collision Detection & Proximity Queries as a Web-Service

Project members: M.Sc. Toni Tan, Dr. Rene Weller, Prof. Dr. Gabriel Zachmann

We present a server-based benchmark that enables a fair analysis of different collision detection and proximity query algorithms. A simple yet interactive web interface allows both expert and non-expert users to evaluate the performance of different collision detection algorithms in standardized or, optionally, user-defined scenarios and to identify possible bottlenecks. In addition to the simple charts and histograms typically used to show results, we propose a heatmap visualization directly on the benchmarked objects that allows the identification of critical regions on a sub-object level.

An anonymous login system, in combination with a server-side scheduling algorithm, guarantees security as well as the reproducibility and comparability of the results. This makes our benchmark useful not only for end users who want to choose the optimal collision detection method or optimize their objects with respect to collision detection, but also for researchers who want to compare their new algorithms with existing solutions.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

DopTree, BoxTree, SIMDop

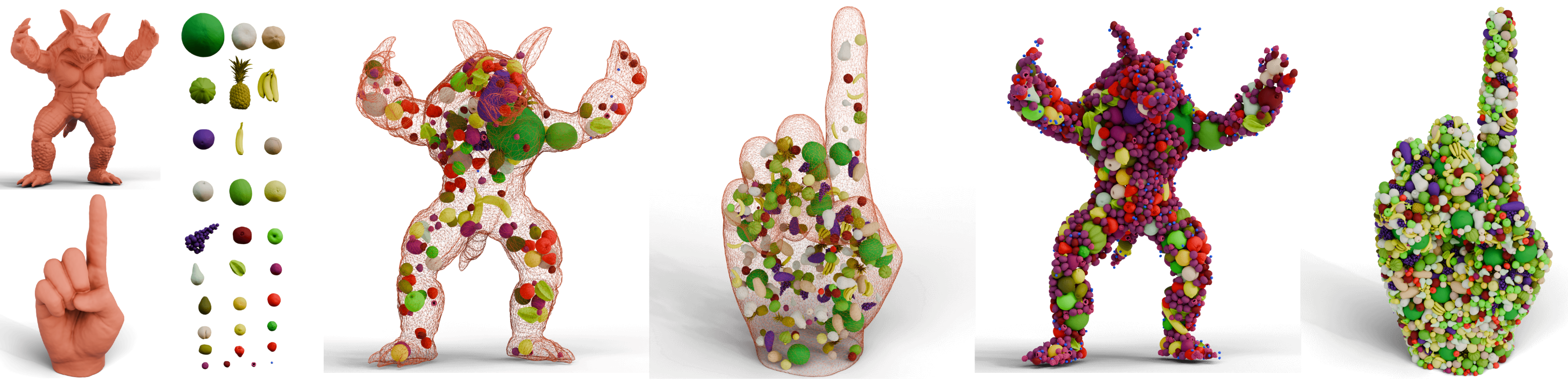

Multi-Objective 3D Packing into Arbitrary Containers

Project members: Prof. Dr. Gabriel Zachmann, Dr. Rene Weller, M.Sc. Hermann Meißenhelter

In this project, we developed software that places arbitrary objects densely inside a single container in three-dimensional space. Besides packing density, there are additional optimization criteria, such as the percentage distribution of object types and the avoidance of spatial clustering of objects of the same type. For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

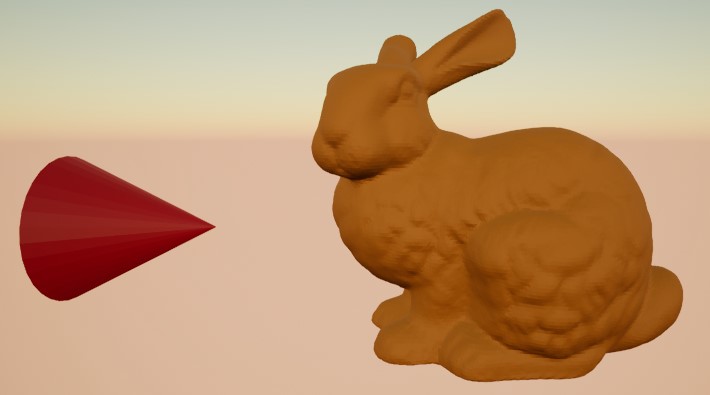

UnrealHaptics

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Hermann Meißenhelter, M.Sc. Janis Roßkamp

UnrealHaptics is a plugin architecture that enables advanced virtual reality (VR) interactions, such as haptics or grasping, in modern game engines.

Its core combines a state-of-the-art collision detection library, which supports very fast and stable force and torque computations, with a general device plugin for communication with different input/output hardware devices, such as haptic devices or CyberGloves.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

SmartOT

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Andre Mühlenbrock

SmartOT is a joint project of the University of Bremen, research and clinical partners, and several companies. It is financed by the German Federal Ministry of Education and Research (BMBF - Bundesministerium für Bildung und Forschung).

The goal is to develop an automatic lighting system and an intelligent control concept for operating theaters. Based on the ASuLa project, we provide the software that automatically controls the light modules to create a uniform illumination of the site and to avoid shadows cast by surgeons and assistants.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

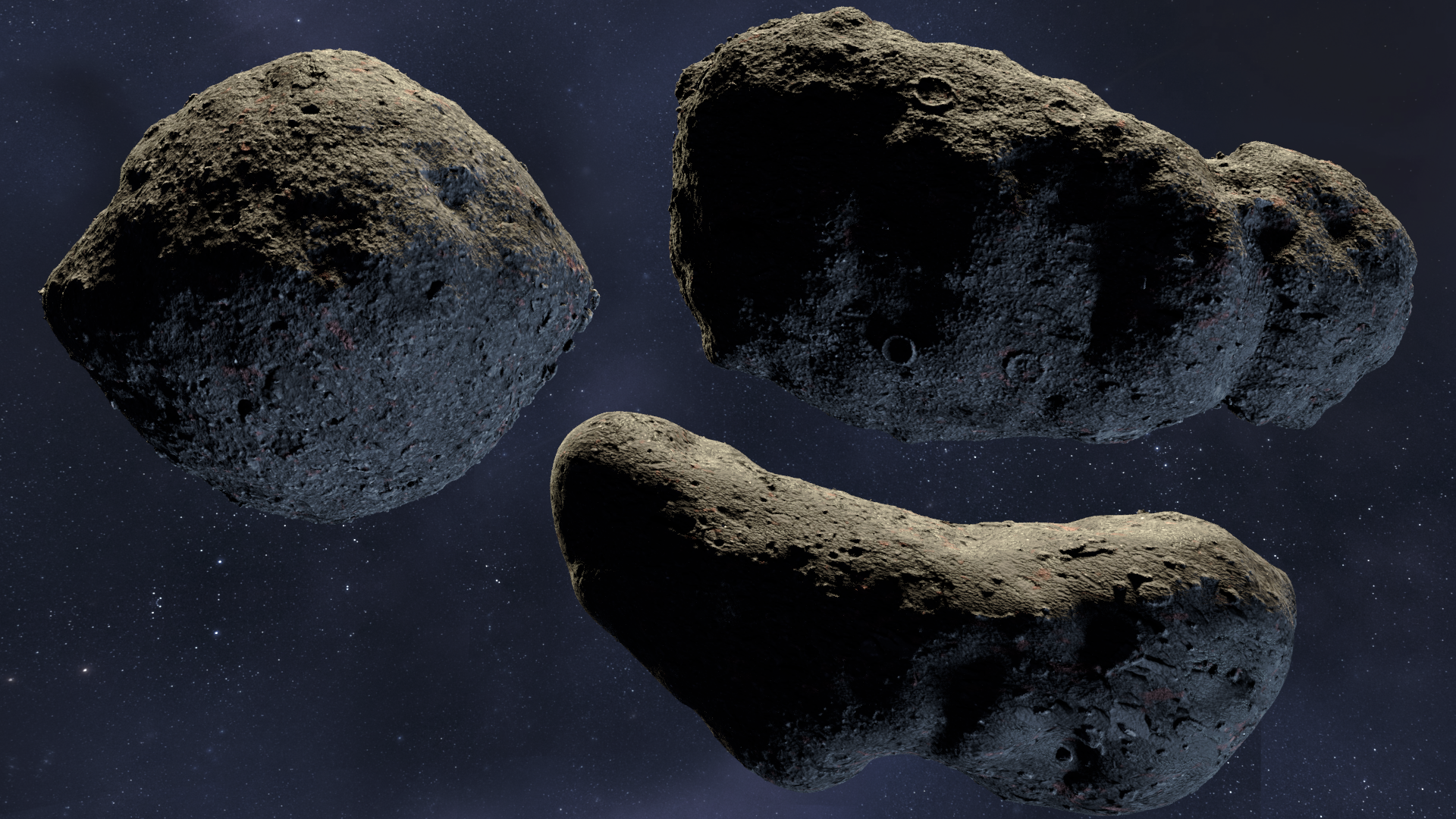

Procedural Asteroid

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Xizhi Li

3D asteroid models play an important role as virtual testbeds in space mission simulation.

In this project, we propose a method to synthesize diverse asteroids from a few intuitive parameters.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

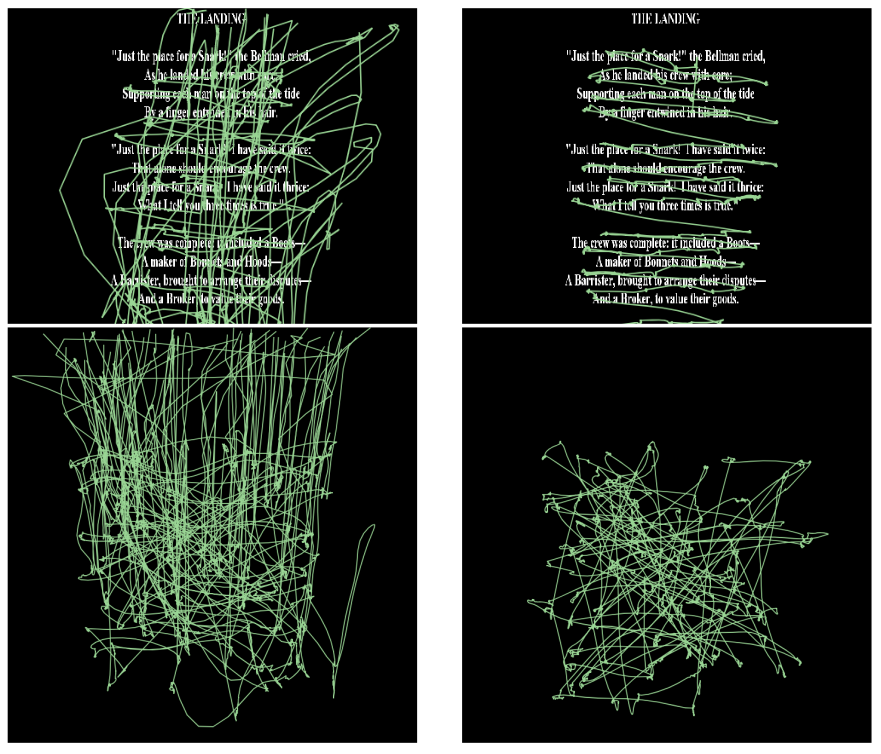

Gaze Biometrics

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Christoph Schröder, M.Sc. Sahar Mahdie Klim, M.Sc. Martin Prinzler, Prof. Dr. Sebastian Maneth

Human eye movement characteristics can be used for user authentication. In this project, we analyze eye-tracking data to identify individual users.

This project is a collaboration between the Institute for Computer Graphics and Virtual Reality and AG Datenbanken.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

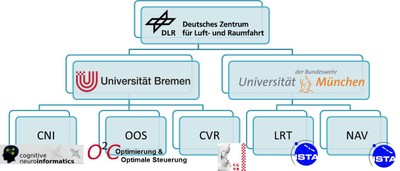

VaMEx-VTB: Virtual Test Bed for Swarm-based Exploration of the Valles Marineris on the Planet Mars

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Jörn Teuber, Phillip Dittmann, Abhishek Srinivas

VaMEx-VTB is a joint venture of the University of Bremen and several other German universities, financed by the German Aerospace Centre (DLR - Deutsches Zentrum für Luft- und Raumfahrt). The initiative develops a virtual test bed (VTB) for testing concepts, algorithms, and hardware for several subprojects of the VaMEx project, which targets swarm-based exploration of the Valles Marineris on the planet Mars. For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

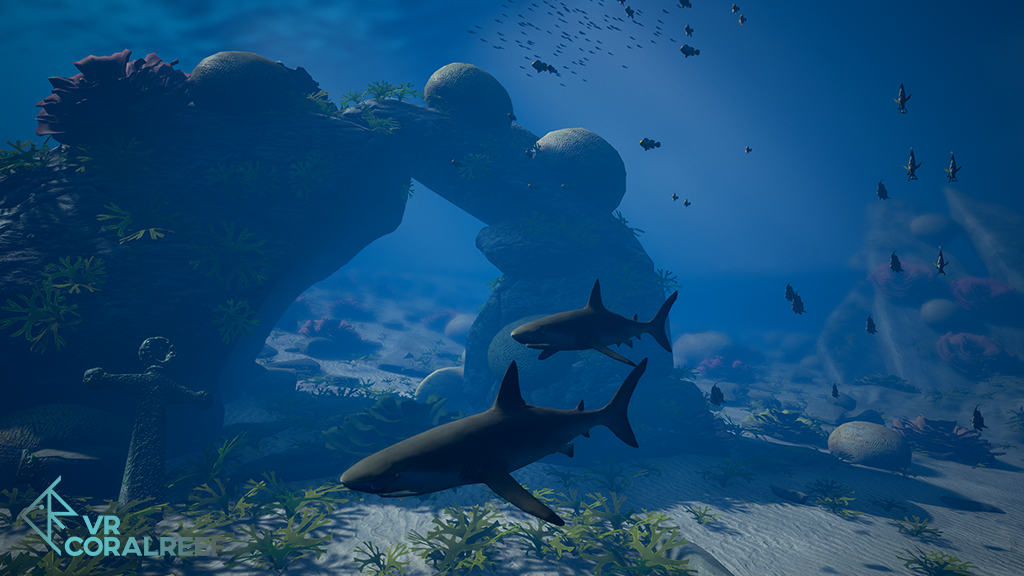

Virtual Coral Reef

Project members: Dr. Rene Weller, Prof. Dr. Gabriel Zachmann

The Virtual Coral Reef is an ecological simulation that was developed in cooperation with the Leibniz Centre for Tropical Marine Research.

Its many features include simulated swarms of fish and dynamic corals that grow or die, all of which can be experienced on a Powerwall and through head-mounted displays (HMDs).

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

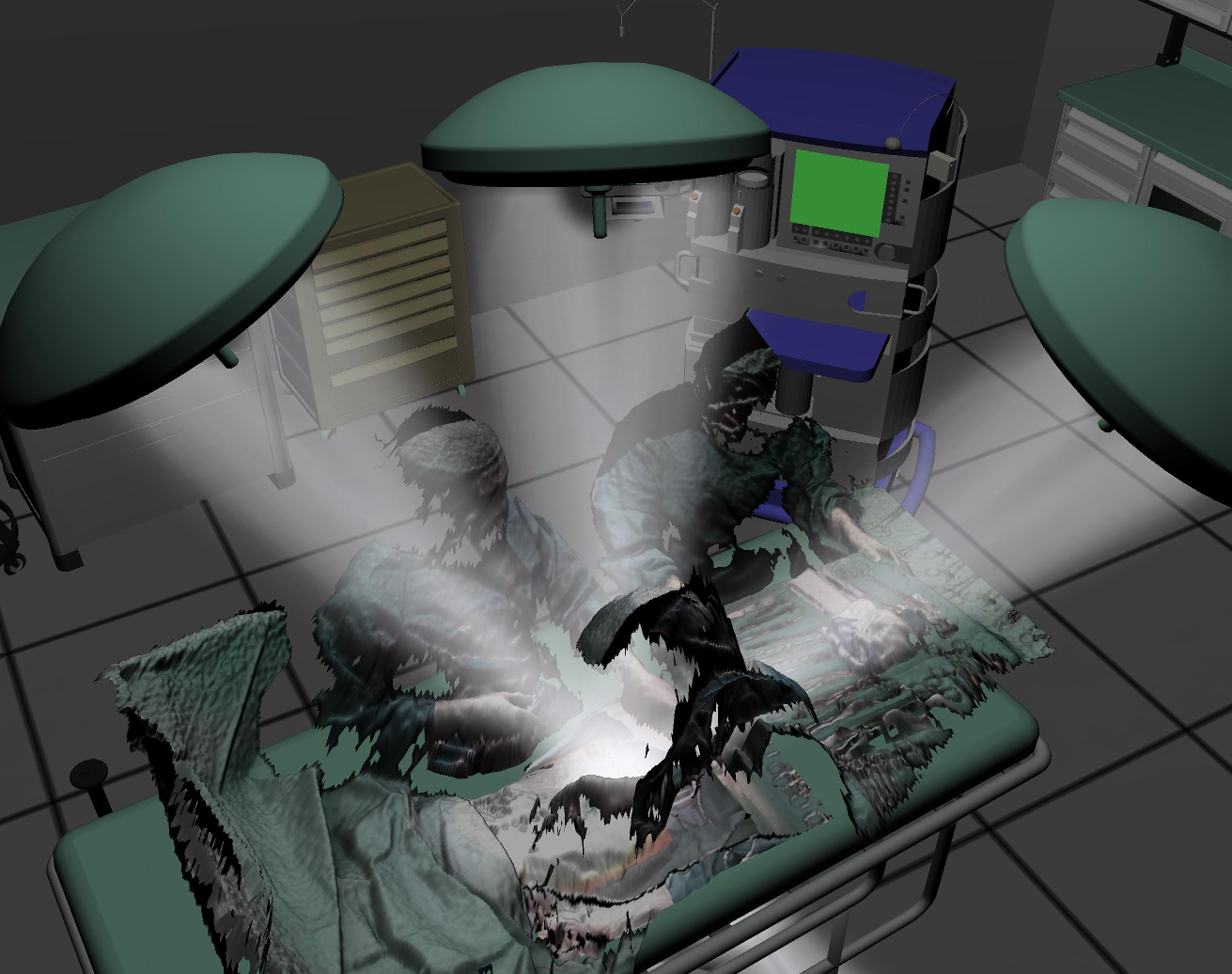

VIVATOP: Versatile Immersive Virtual and Augmented Tangible Operating Room

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Roland Fischer

VIVATOP is a joint project of the University of Bremen, research and clinical partners, and several companies. It is financed by the German Federal Ministry of Education and Research (BMBF - Bundesministerium für Bildung und Forschung).

The goal of VIVATOP is to use a combination of modern Virtual Reality (VR) and Augmented Reality (AR) techniques as well as 3D printing to support and enhance the training, planning and execution of surgeries.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

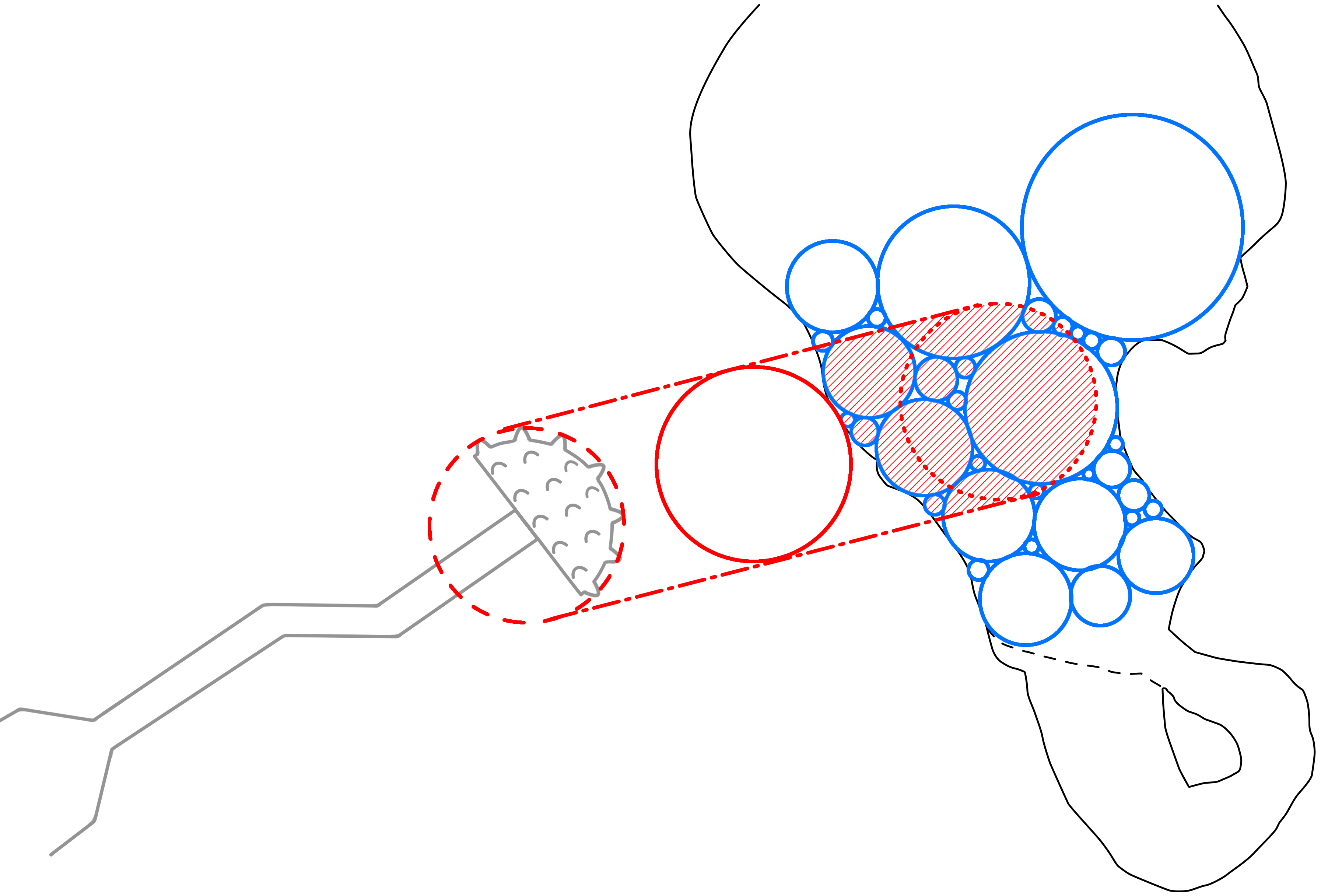

HIPS: Hip Prosthesis Implantation Simulator

Project members: M.Sc. Maximilian Kaluschke, Prof. Dr. Gabriel Zachmann

In cooperation with other German universities and medium-sized companies, we are developing a simulation environment for hip replacement surgery.

Our task in this project is to simulate physically realistic collision behaviour when the bone mill and the hip bone come into contact. Additionally, we simulate the removal of bone material during milling.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de, mxkl at uni-bremen dot de

DynCam: A Reactive Multithreaded Pipeline Library for 3D Telepresence in VR

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Christoph Schröder, M.Sc. Roland Fischer

DynCam is a platform-independent and modular library for real-time, low-latency streaming of point clouds, focusing on telepresence in VR.

Our library processes and combines the color and depth information of several distributed RGB-D cameras and streams it over the network. This processing is organized as a pipeline that supports implicit multithreading by using a reactive programming approach. The resulting single point cloud can be rendered on multiple clients in real time. One important focus is the fast compression of the point cloud data.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

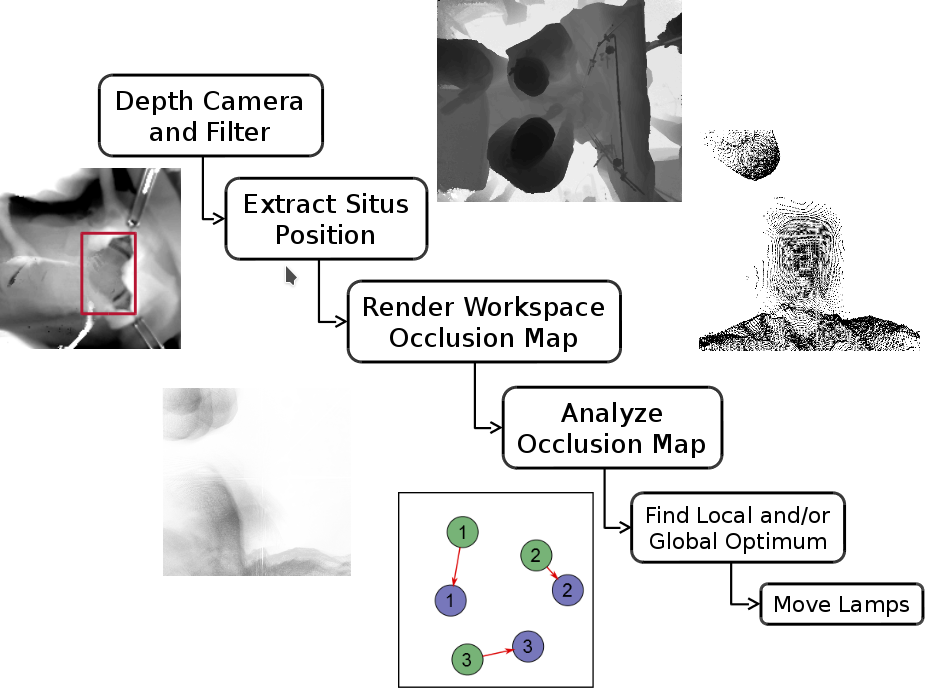

Autonomous Surgical Lamps

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Jörn Teuber

As part of the Creative Unit - Intra-Operative Information, we are developing algorithms for the autonomous positioning of surgical lamps in open surgery.

The basic idea is to take the point cloud given by the depth camera and render it from the perspective of the situs towards the working space of the lamps above the operating table. Based on this rendering, optimal positions for a given set of lamps are computed and applied.
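The occlusion test behind this idea can be sketched in Python as follows. Instead of rendering the point cloud, this simplified example checks each candidate lamp position with a brute-force line-of-sight test against the cloud points, which are treated as small occluding spheres. The function names, the `radius` parameter, and the "closest unoccluded position first" ranking are assumptions made for illustration, not the project's actual optimization.

```python
import math


def segment_point_distance(a, b, p):
    """Shortest distance between point p and the segment from a to b."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    length_sq = sum(c * c for c in ab)
    if length_sq == 0.0:
        return math.dist(a, p)
    t = sum(x * y for x, y in zip(ab, ap)) / length_sq
    t = max(0.0, min(1.0, t))  # clamp the projection to the segment
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(closest, p)


def is_unoccluded(situs, lamp, cloud, radius):
    """True if no cloud point lies within `radius` of the situs-lamp segment."""
    return all(segment_point_distance(situs, lamp, p) > radius for p in cloud)


def choose_lamp_positions(situs, candidates, cloud, radius, count):
    """Pick up to `count` unoccluded candidates, closest to the situs first.

    The ranking criterion is a placeholder assumption for this sketch.
    """
    free = [c for c in candidates if is_unoccluded(situs, c, cloud, radius)]
    free.sort(key=lambda c: math.dist(situs, c))
    return free[:count]
```

A rendering-based approach evaluates all candidate directions in one pass, whereas this brute-force variant costs one segment test per candidate and cloud point; it is meant only to make the geometric criterion explicit.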

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

KaNaRiA: Kognitionsbasierte, autonome Navigation am Beispiel des Ressourcenabbaus im All

Project members: Prof. Dr. Gabriel Zachmann, M.Sc. Patrick Lange, M.Sc. Abhishek Srinivas

KaNaRiA (from its German acronym: Kognitionsbasierte, autonome Navigation am Beispiel des Ressourcenabbaus im All) is a joint venture of the University of Bremen and the Universität der Bundeswehr in Munich, financed by the German Aerospace Centre (DLR - Deutsches Zentrum für Luft- und Raumfahrt). For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

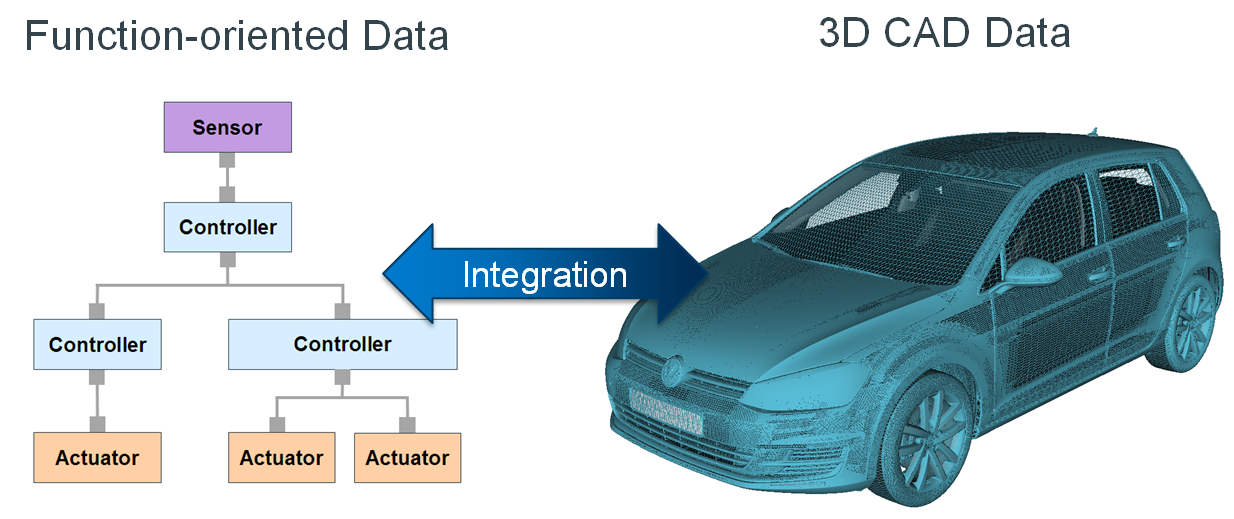

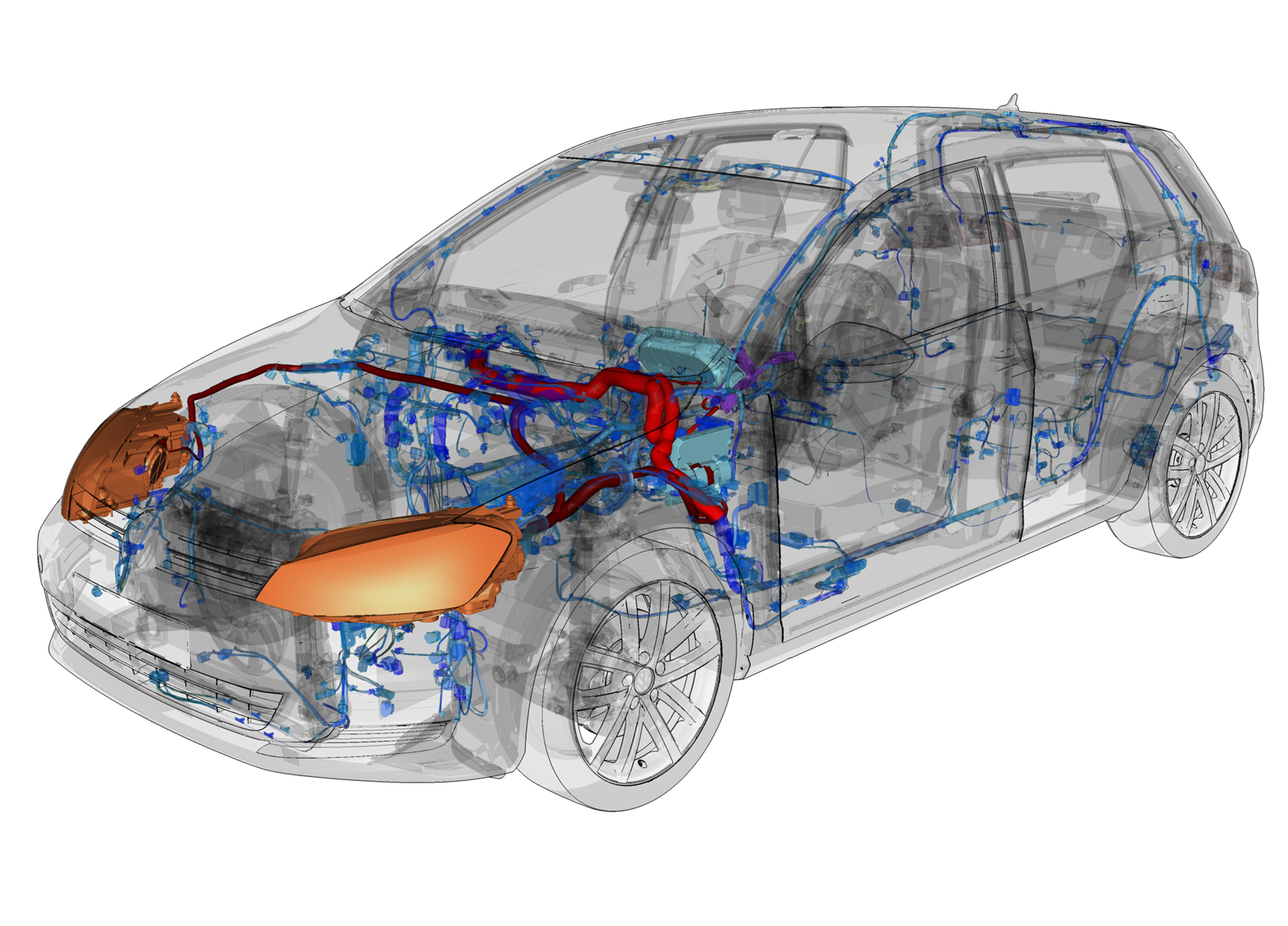

New Methodologies for Automotive PLM by combining Function-oriented Development with 3D CAD and Virtual Reality

Project members: Prof. Dr. Gabriel Zachmann, Dipl.-Inf. Moritz Cohrs

In the automotive industry, a function-oriented development approach extends the traditional component-oriented development by focusing on the interdisciplinary development of vehicle functions as mechatronic and cyber-physical systems. It is an important measure for mastering the high and still increasing product complexity. In addition, technologies like virtual reality, computer-aided design (CAD), and virtual prototyping offer largely established tools that help the automotive industry handle different challenges across product lifecycle management. So far, however, the potential of 3D virtual reality methods has not been evaluated in the context of automotive function-oriented development. This research therefore focuses on developing a novel function-oriented 3D methodology that significantly improves and streamlines relevant workflows within function-oriented development. To this end, a consistent integration of function-oriented data with 3D CAD data is realized in a prototypical implementation. The benefits of the new methodology are assessed in multiple automotive use cases and further evaluated with user studies.

For further information please visit our project homepage.

E-Mail: moritz.cohrs at volkswagen.de

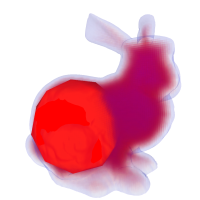

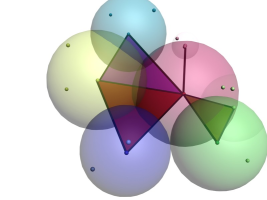

Protosphere - A GPU-Assisted Prototype Guided Sphere Packing Algorithm for Arbitrary Objects

Project members: Prof. Dr. Gabriel Zachmann, Dr. Rene Weller

We present a new algorithm that efficiently computes a space-filling sphere packing for arbitrary objects. It is independent of the object's representation and can easily be extended to higher dimensions.

The basic idea is very simple and related to prototype-based approaches known from machine learning. This approach directly leads to a parallel algorithm that we have implemented using CUDA. As a byproduct, our algorithm yields an approximation of the object's medial axis.
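The prototype idea can be sketched in a few lines of serial Python. In this simplified reading, a prototype starts at a random free point and is repeatedly pushed away from its closest obstacle point (the container surface or an already placed sphere) with a shrinking step size; once it settles, a sphere is inserted there. The cube container with an analytic distance function, the cooling schedule, and all function names are assumptions made for this example; it is not the CUDA implementation and does not handle arbitrary objects.

```python
import math
import random


def closest_on_box_surface(p, size):
    """Closest surface point and distance for a point p inside [0, size]^3."""
    best_axis, best_wall, best_dist = 0, 0.0, float("inf")
    for axis in range(3):
        for wall in (0.0, size):
            dist = abs(p[axis] - wall)
            if dist < best_dist:
                best_axis, best_wall, best_dist = axis, wall, dist
    q = list(p)
    q[best_axis] = best_wall
    return tuple(q), best_dist


def closest_obstacle(p, size, spheres):
    """Closest point on the box surface or on any placed sphere, and its distance."""
    q, dist = closest_on_box_surface(p, size)
    for center, radius in spheres:
        d = math.dist(p, center)
        gap = d - radius  # negative if p lies inside the sphere
        if gap < dist:
            dist = gap
            if d > 0.0:
                q = tuple(c + (x - c) * radius / d for c, x in zip(center, p))
    return q, dist


def place_sphere(size, spheres, rng, iterations=200):
    """Let one prototype converge, then return the sphere (center, radius)."""
    while True:  # rejection-sample a free starting point
        p = tuple(rng.uniform(0.0, size) for _ in range(3))
        _, dist = closest_obstacle(p, size, spheres)
        if dist > 0.0:
            break
    for i in range(iterations):
        q, dist = closest_obstacle(p, size, spheres)
        # Step away from the closest obstacle point; the step never exceeds
        # half the free distance, so the prototype cannot enter an obstacle.
        step = 0.5 * dist * (1.0 - i / iterations)
        p = tuple(x + step * (x - y) / dist for x, y in zip(p, q))
    _, radius = closest_obstacle(p, size, spheres)
    return p, radius


def pack_spheres(size, count, seed=0):
    """Greedily place `count` non-overlapping spheres inside the cube."""
    rng = random.Random(seed)
    spheres = []
    for _ in range(count):
        spheres.append(place_sphere(size, spheres, rng))
    return spheres
```

Because each prototype only needs a closest-point query, many prototypes can in principle be advanced independently, which is what makes this kind of scheme attractive for a parallel implementation.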

This project is partially funded by BMBF grant Avilus / 01 IM 08 001 U.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

Haptesha - A Collaborative Multi-User Haptic Workspace

Project members: Prof. Dr. Gabriel Zachmann, Dr. Rene Weller

Haptesha is a haptic workspace that allows high-fidelity, two-handed, multi-user interactions in scenarios containing a large number of dynamically simulated rigid objects and a polygon count that is limited only by the capabilities of the graphics card.

This project is partially funded by BMBF grant Avilus / 01 IM 08 001 U.

For further information please visit our project homepage.

Awards: Winner of RTT Emerging Technology Contest 2010

E-Mail: zach at cs.uni-bremen.de

Real-time camera-based 3D hand tracking

Project members: Prof. Dr. Gabriel Zachmann, Dr. Daniel Mohr

Tracking a user's hand can be a great alternative to common interfaces for human-computer interaction. The goal of this project is to observe the hand with cameras. The captured images deliver the information needed to determine the hand position and state. Because of measurement noise, occlusion in the captured images, and real-time constraints, hand-tracking is a scientific challenge.

Our approach is model-based, utilizing multiple cameras to reduce uncertainty. In order to achieve real-time hand-tracking, we will try to reduce the very high complexity of the hand model, which has about 27 degrees of freedom.

This project is partially funded by DFG grant ZA292/1-1.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

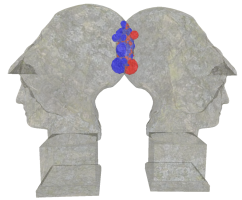

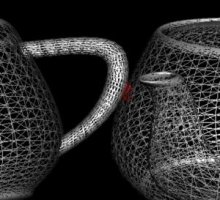

Inner Sphere Trees

Project members: Prof. Dr. Gabriel Zachmann, Dr. Rene Weller

Collision detection between rigid objects plays an important role in many fields of robotics and computer graphics, e.g. for path-planning, haptics, physically-based simulations, and medical applications. Today, there exists a wide variety of freely available collision detection libraries, and nearly all of them are able to work at interactive rates, even for very complex objects.

Most collision detection algorithms dealing with rigid objects use some kind of bounding volume hierarchy (BVH). The main idea behind a BVH is to subdivide the primitives of an object hierarchically until there are only single primitives left at the leaves. BVHs guarantee very fast responses at query time, as long as no further information than the set of colliding polygons is required for the collision response. However, most applications require much more information in order to solve or avoid the collisions.

One way to do this is to compute repelling forces based on the penetration depth. However, there is no universally accepted definition of the penetration depth between a pair of polygonal models. Most often, the minimum translation vector needed to separate the objects is used, but this may lead to discontinuous forces.

Moreover, haptic rendering requires update rates of at least 200 Hz, and preferably 1 kHz, to guarantee stable force feedback. Consequently, the collision detection time should never exceed 5 ms.
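The time budget follows directly from the update rate, since one cycle lasts the reciprocal of the rate. The small Python helper below (the name `time_budget_ms` is made up for this example) spells out the arithmetic for the two rates mentioned.

```python
def time_budget_ms(update_rate_hz: float) -> float:
    """Maximum time available per cycle, in milliseconds, at a given update rate."""
    if update_rate_hz <= 0:
        raise ValueError("update rate must be positive")
    return 1000.0 / update_rate_hz


print(time_budget_ms(200))   # 5.0 ms per cycle at 200 Hz
print(time_budget_ms(1000))  # 1.0 ms per cycle at 1 kHz
```

At the preferred rate of 1 kHz, the whole cycle, including collision detection, has to fit into a single millisecond.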

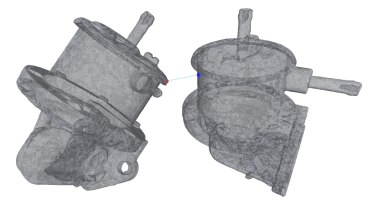

We present a novel geometric data structure for approximate collision detection at haptic rates between rigid objects. Our data structure, which we call inner sphere trees, supports both proximity queries and penetration volume queries; the latter is related to the water displacement of the overlapping region and thus corresponds to a physically motivated force. Our method is able to compute continuous contact forces and torques that enable a stable rendering of 6-DOF penalty-based distributed contacts.

The main idea of our new data structure is to bound the object from the inside with a bounding volume hierarchy, which can be built based on dense sphere packings. The results show performance at haptic rates both for proximity and penetration volume queries for models consisting of hundreds of thousands of polygons.
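To make the penetration volume concrete, the following Python sketch approximates it by summing the overlap volumes of all pairs of inner spheres from two objects, using the standard closed-form volume of a sphere-sphere intersection. It deliberately uses a brute-force double loop instead of a hierarchy, and the function names are assumptions for this example; it also assumes that the spheres within one object do not overlap each other, as in a sphere packing.

```python
import math


def sphere_overlap_volume(c1, r1, c2, r2):
    """Volume of the intersection of two spheres (centers c1, c2; radii r1, r2)."""
    d = math.dist(c1, c2)
    if d >= r1 + r2:
        return 0.0  # disjoint or touching
    if d <= abs(r1 - r2):
        r = min(r1, r2)  # smaller sphere lies completely inside the larger one
        return 4.0 / 3.0 * math.pi * r ** 3
    # Lens-shaped intersection of two partially overlapping spheres.
    return (math.pi * (r1 + r2 - d) ** 2
            * (d * d + 2.0 * d * (r1 + r2) - 3.0 * (r1 - r2) ** 2)
            / (12.0 * d))


def penetration_volume(spheres_a, spheres_b):
    """Sum of pairwise overlap volumes between two sets of (center, radius)."""
    return sum(sphere_overlap_volume(ca, ra, cb, rb)
               for ca, ra in spheres_a
               for cb, rb in spheres_b)
```

The overlap volume changes continuously as the spheres move, which is one reason a volume-based measure is attractive for computing continuous forces. A hierarchy over the spheres would serve to skip the large majority of pairs that cannot overlap.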

This project is partially funded by DFG grant ZA292/1-1 and BMBF grant Avilus / 01 IM 08 001 U.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

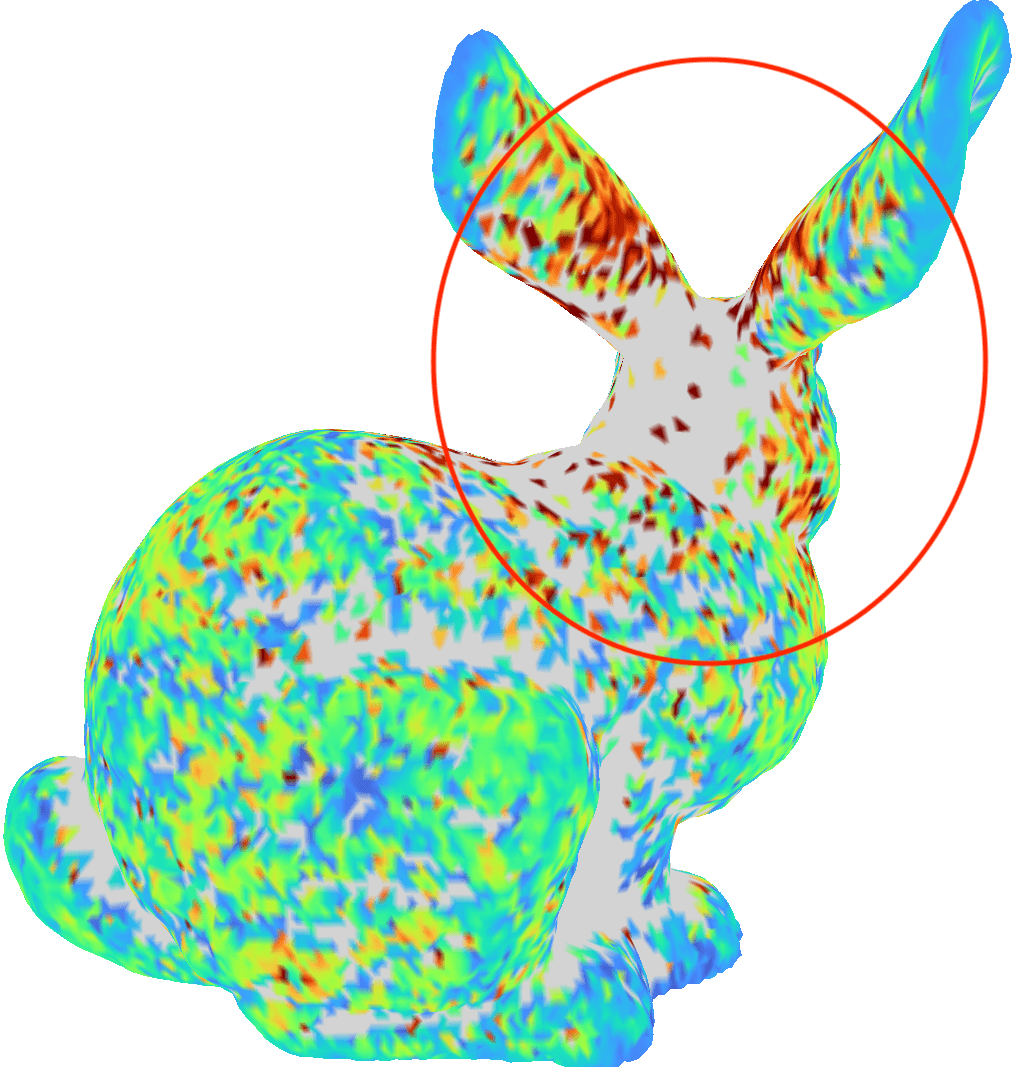

Open-Source Benchmarking Suite for Collision Detection Libraries

Project members: Prof. Dr. Gabriel Zachmann, Dr. Rene Weller

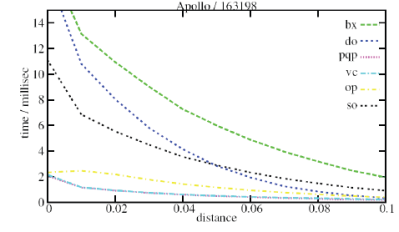

Fast algorithms for collision detection between polygonal objects are needed in many fields of computer science. In nearly all of these applications, collision detection is the computational bottleneck. To maximize application speed, it is essential to select the best-suited algorithm.

However, there are no standard benchmarks available to compare different algorithms. As a result, it is nontrivial to compare two algorithms and their implementations. A standardized benchmarking suite for collision detection would make fair comparisons between algorithms much easier. Such a benchmark must be designed with care, so that it includes a broad spectrum of different and interesting contact scenarios. In this project, we developed a simple benchmark procedure that addresses this problem. It has been kept very simple so that other researchers can easily reproduce the results and compare their algorithms.

Our benchmarking suite is flexible and robust, and other collision detection libraries are easy to integrate. Moreover, the benchmarking suite is freely available and can be downloaded together with a set of objects in different resolutions that cover a wide range of possible scenarios for collision detection algorithms, and a set of precomputed test points for these objects.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

Open-Source Collision Detection Library

Project members: Prof. Dr. Gabriel Zachmann, Dr. Rene Weller

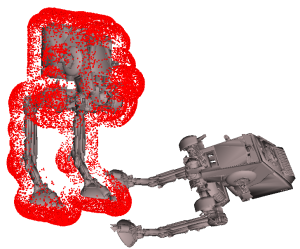

Fast and exact collision detection between a pair of graphical objects undergoing rigid motions is at the core of many simulation and planning algorithms in computer graphics and related areas (for instance, automatic path finding or tolerance checking). In particular, virtual reality applications such as virtual prototyping or haptic rendering need exact collision detection at interactive speed for very complex, arbitrary "polygon soups". It is also a fundamental problem of dynamic simulation of rigid bodies, simulation of natural interaction with objects, haptic rendering, path planning, and CAD/CAM.

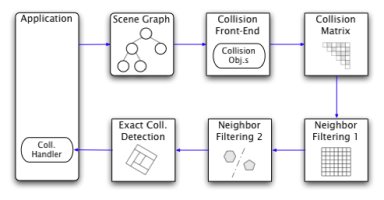

In order to provide an easy-to-use library for other researchers and open-source projects, we have implemented our algorithms in an object-oriented library, which is based on OpenSG. It is structured as a pipeline, contains algorithms for the broad phase (grid, convex hull test, separating planes), and the narrow phase (Dop-Tree, BoxTree, etc.).

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

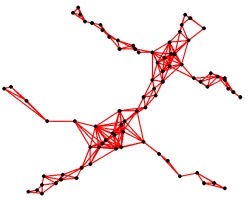

Point-based Object Representation

Project member: Prof. Dr. Gabriel Zachmann

Partners: This project was conducted in cooperation with the Algorithmen und Komplexität group at Paderborn University, headed by Prof. Friedhelm Meyer auf der Heide.

In the past few years, point clouds have had a renaissance caused by the widespread availability of 3D scanning technology. In order to render and interact with objects thus represented, one must define an appropriate surface (even if it is not explicitly reconstructed). This definition should produce a surface as close to the original surface as possible while being robust against noise (introduced by the scanning process). At the same time, it should allow rendering of and interaction with the object to be as fast as possible.

In this project, we have worked on such a definition. It builds on an implicit function defined using weighted least squares (WLS) regression and geometric proximity graphs. This yields several improvements such as adaptive kernel bandwidth, automatic handling of holes, and feature-preserving smoothing.
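A much simplified variant of such an implicit function can be sketched in Python. The example below evaluates the Gaussian-weighted mean of the signed distances from a query point to the tangent planes of the cloud points, which is the zero-order weighted least squares fit of a constant to those distances; the surface is the zero set of this function. It assumes that every point carries a unit normal and uses Euclidean distances with one fixed bandwidth, so it does not include the proximity graphs or the adaptive bandwidth described above. All names are hypothetical.

```python
import math


def gaussian_weight(dist, bandwidth):
    """Gaussian kernel weight for a point at distance `dist`."""
    return math.exp(-(dist * dist) / (bandwidth * bandwidth))


def implicit_value(x, points, normals, bandwidth):
    """Weighted mean of signed point-to-tangent-plane distances at x.

    Minimizes sum_i w_i * (c - n_i . (x - p_i))^2 over the constant c.
    Positive values lie on the side the normals point to.
    """
    numerator = 0.0
    denominator = 0.0
    for p, n in zip(points, normals):
        w = gaussian_weight(math.dist(x, p), bandwidth)
        signed = sum(ni * (xi - pi) for ni, xi, pi in zip(n, x, p))
        numerator += w * signed
        denominator += w
    if denominator == 0.0:
        raise ValueError("query point is too far from all samples")
    return numerator / denominator
```

For points sampled from the plane z = 0 with normals (0, 0, 1), the function returns the z coordinate of the query point, so its zero set reproduces the plane.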

We also investigate methods that can quickly identify intersections of objects represented in such a way. This is, again, greatly facilitated by utilizing graph algorithms on the proximity graphs.

E-Mail: zach at cs.uni-bremen.de

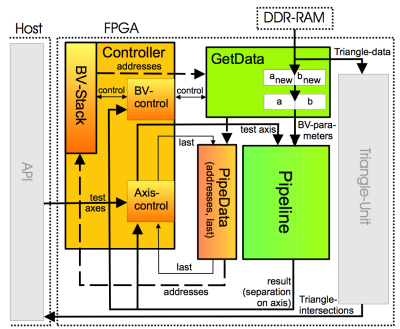

Special-Purpose Hardware for Collision Detection

Project member: Prof. Dr. Gabriel Zachmann

Partners: Technische Informatik group at Bonn University, headed by Prof. Joachim Anlauf.

Collision detection is one of the most time-consuming tasks in all kinds of applications that involve physical modeling and rendering. These include, to name just a few, virtual prototyping, haptic rendering, games, animation systems, automatic path finding, remote control of vehicles (telepresence), medical training, and medical planning systems.

All of these areas place high demands on collision detection: it should run in real time under all circumstances, and it must be able to handle large numbers of objects and large numbers of polygons. For some applications, such as physically-based simulation or haptic rendering, the collision detection must run at a much higher rate than the rendering itself (for haptics, an update rate of 1000 Hz must be achieved).

From the very beginning, special-purpose hardware has been designed for rendering computer graphics. This makes sense for two reasons. First, the system can render graphics while it is already doing the computations for the next frame. Second, the process of rendering itself can be broken down into lots of small sub-tasks.

Currently, however, the computational power of graphics cards (i.e., rendering power) increases faster than Moore's Law, while the computing power of general-purpose CPUs increases "only" by Moore's Law. This leads to a situation where interactive graphics applications, such as VR systems, computer games, CAD, or medical simulations, can render much more complex models than they can simulate.

Motivated by the above findings, the overall objective of this project is the development of specialized hardware for PC-based VR and entertainment systems to deliver real-time collision detection for large-scale and complex environments. This in turn will allow for real-time physically-based behavior of complex rigid bodies in large-scale scenarios.

In addition, the infrastructure for integrating the chip into a standard PC will be developed, which includes development of drivers, API, monitoring tools, and libraries. Finally, several representative sample applications will be developed demonstrating the performance gain of the newly developed hardware. These will encompass applications from virtual prototyping as well as the gaming and the computer animation industry.

This project is partially funded by DFG grant ZA292/2-1.

For further information please visit our project homepage.

E-Mail: zach at cs.uni-bremen.de

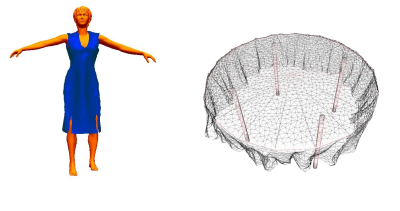

Kinetic Bounding Volume Hierarchies for Deformable Objects

Project members: Prof. Dr. Gabriel Zachmann, Dr. Rene Weller

Bounding volume hierarchies for geometric objects are widely employed in many areas of computer science to accelerate geometric queries, e.g., in computer graphics for ray-tracing, occlusion culling, and collision detection. Usually, a bounding volume hierarchy is constructed in a pre-processing step, which is suitable as long as the objects are rigid. However, deformable objects play an important role, e.g., for creating virtual environments in medical applications or cloth simulation. If such an object deforms, the pre-processed hierarchy becomes invalid.

To keep using this method for deformable objects, the hierarchies must be updated after each deformation.

In this project, we utilize the framework of event-based kinetic data structures for designing and analyzing new algorithms for updating bounding volume hierarchies undergoing arbitrary deformations. In addition, we apply our new algorithms and data structures to the problem of collision detection.
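The event-based idea can be illustrated with a one-dimensional Python sketch. It tracks which point defines the upper bound of a bounding interval along one axis while all points move linearly, and it only does work at the moments when another point overtakes the current holder. The linear motion model and the function names are assumptions for this example; a full kinetic hierarchy would maintain such certificates for every bound of every bounding volume.

```python
def next_max_event(x0, v, holder, now=0.0):
    """Next time another point overtakes the current maximum holder.

    Points move as x_i(t) = x0[i] + v[i] * t. Returns (time, index),
    or None if the holder is never overtaken.
    """
    best = None
    for i, (xi, vi) in enumerate(zip(x0, v)):
        if i == holder or vi <= v[holder]:
            continue  # only a strictly faster point can overtake the holder
        t = (x0[holder] - xi) / (vi - v[holder])
        if t >= now and (best is None or t < best[0]):
            best = (t, i)
    return best


def track_max(x0, v, t_end):
    """Process events up to t_end; return the final holder and the event list."""
    holder = max(range(len(x0)), key=lambda i: (x0[i], v[i]))
    now, events = 0.0, []
    while True:
        event = next_max_event(x0, v, holder, now)
        if event is None or event[0] > t_end:
            return holder, events
        now, holder = event
        events.append(event)


holder, events = track_max([0.0, 5.0, 3.0], [2.0, 0.0, 1.0], t_end=10.0)
print(holder, events)  # 0 [(2.0, 2), (3.0, 0)]
```

Between two events the bound is known to be valid without any checking, so the update cost depends on how often the combinatorial structure changes rather than on the number of time steps.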

E-Mail: zach at cs.uni-bremen.de

Natural Interaction in Virtual Environments

Project members: Prof. Dr. Gabriel Zachmann, Dr. Rene Weller

Virtual reality (VR) promised to allow users to experience and work with three-dimensional computer-simulated environments just like with the real world. Currently, VR offers a lot of efficient and more or less intuitive interaction paradigms.

However, users still cannot interact with virtual environments in a way they are used to in the real world. In particular, the human hand, which is our most versatile tool, is still only very crudely represented in the virtual world. Natural manual operations, such as grasping, pinching, pushing, etc., cannot be performed with the virtual hand in a plausible and efficient way in real-time.

Therefore, the goal of this project is to model and simulate the real human hand by a virtual hand. Such a virtual hand is controlled by the user of a virtual environment via hand tracking technologies, such as a CyberGlove or camera-based hand tracking (see our companion project). Then, the interaction between such a human hand model and the graphical objects in the virtual environment is to be modelled and simulated, such that the aforementioned natural hand operations can be performed efficiently. Note that our approach is not to try to achieve physical correctness of the interactions but to achieve real-time performance under all circumstances while maintaining physical plausibility.

In order to achieve our goal, we focus our research on deformable collision detection, physically-based simulation, and realistic animation of the virtual hand.

This technology will have a number of very useful applications that, until now, could not be performed effectively and satisfactorily. Among them are virtual assembly simulation, 3D sketching, surgical training, and simulation games.

This project is partially funded by DFG grant ZA292/1-1.

E-Mail: zach at cs.uni-bremen.de