Efficient Rendering of Massive and Dynamic Point Cloud Data in State-of-the-Art Game Engines

This thesis addresses point cloud rendering. As part of it, a plugin for the Unreal Engine was developed that is capable of rendering massive and dynamic point cloud data in real time.

Description / Abstract

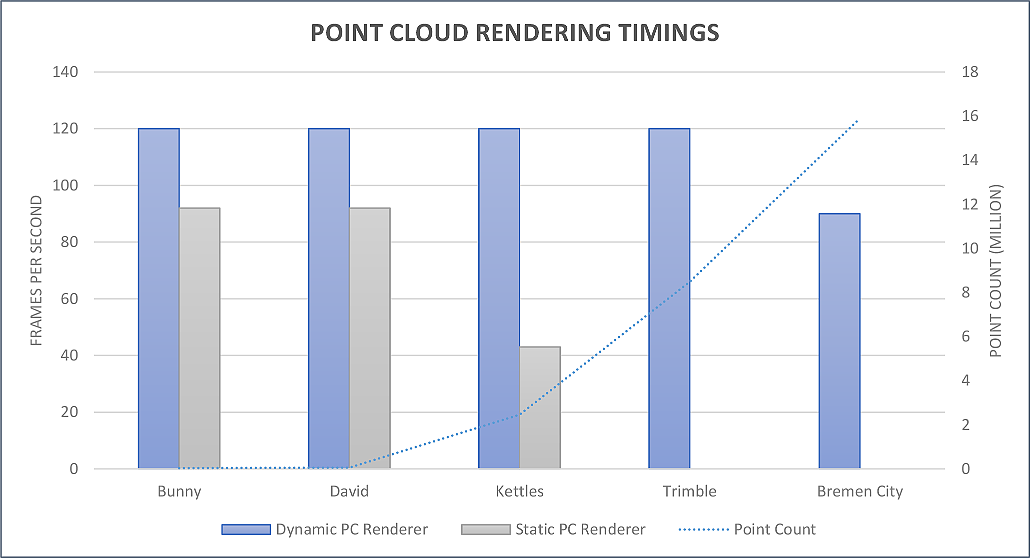

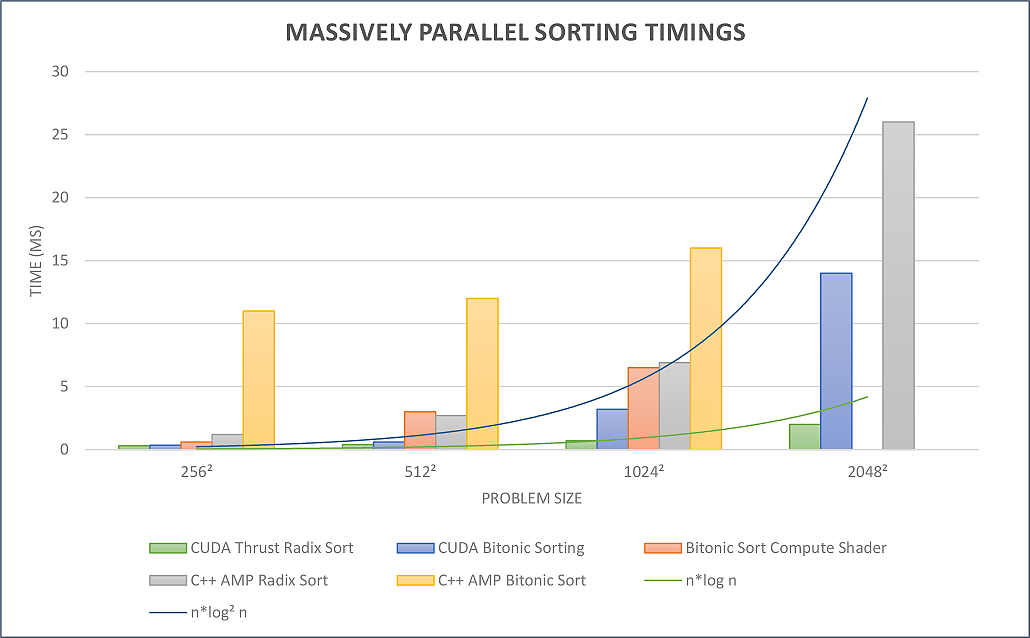

Point clouds have gained considerable popularity since professional laser scanners and consumer devices such as the Microsoft Kinect became available to a broad audience. Today, point clouds are used in a multitude of industries, such as the 3D industry, architecture, and robotics. At the same time, these industries increasingly rely on popular 3D graphics engines, such as Unity3D or Epic's Unreal Engine, for their real-time applications. However, very little software and research is available on how to efficiently integrate a high-quality point cloud renderer into these complex, polygon-based state-of-the-art engines. In this thesis, I present an efficient way to implement a GPU-based point cloud renderer that is capable of rendering huge point clouds, both static and fully dynamic, in high quality and in real time inside the Unreal Engine. To this end, a novel approach to order-independent transparency (OIT) is developed that sorts the point cloud with a massively parallel bitonic sort in a compute shader. The presented renderer could be applied in various fields, such as collaborative virtual environments (CVEs) or dynamic, on-the-fly environment scanning, which is relevant, for instance, in robotics. The point cloud renderer will be published as a publicly available, open-source plugin for the Unreal Engine.
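To illustrate the sorting idea mentioned above, the following is a minimal CPU sketch of a bitonic sorting network that orders points back to front by their squared distance to the camera. It is not the thesis's compute shader: the function names (`squared_distance`, `bitonic_argsort_descending`, `sort_back_to_front`) are hypothetical, the padding to a power of two is an assumption of this sketch, and the inner loop over `i` stands in for what would be one GPU thread per element.

```python
import math


def squared_distance(point, camera):
    """Squared Euclidean distance; sufficient as a sort key (no sqrt needed)."""
    return sum((p - c) ** 2 for p, c in zip(point, camera))


def bitonic_argsort_descending(keys):
    """Return the indices of `keys` ordered from largest to smallest key.

    Bitonic sort needs a power-of-two length, so the input is padded with
    -inf keys (an assumption of this sketch); padding is dropped at the end.
    """
    n = len(keys)
    size = 1
    while size < n:
        size *= 2
    padded = list(keys) + [-math.inf] * (size - n)
    idx = list(range(size))

    k = 2  # size of the bitonic sequences being merged
    while k <= size:
        j = k // 2  # distance between compared elements
        while j >= 1:
            # Each (k, j) pass is data-parallel: on a GPU every i would be
            # handled by its own thread, with a barrier between passes.
            for i in range(size):
                partner = i ^ j
                if partner > i:
                    descending_block = (i & k) == 0
                    a, b = padded[idx[i]], padded[idx[partner]]
                    if (descending_block and a < b) or (not descending_block and a > b):
                        idx[i], idx[partner] = idx[partner], idx[i]
            j //= 2
        k *= 2
    return [i for i in idx if i < n]


def sort_back_to_front(points, camera):
    """Order points farthest-first, as needed for back-to-front blending."""
    keys = [squared_distance(p, camera) for p in points]
    return [points[i] for i in bitonic_argsort_descending(keys)]
```

Because every compare-and-swap within a pass touches a disjoint pair of elements, the passes map naturally onto parallel hardware, which is the property that makes this kind of network attractive for sorting on the GPU.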

Results

First, a way to implement an efficient, GPU-based point cloud renderer in a polygon-based pipeline was presented. The ability to render large and dynamically changing point clouds is particularly notable, since very few approaches focus on rendering dynamic point clouds. In this context, an alternative method for order-independent transparency was proposed: the texture holding the point positions is sorted with a parallel bitonic sort according to the camera position. Moreover, it was shown that combining a scanning device (in this case the Microsoft Kinect) with a positional tracking system (in this case the HTC Vive) allows for easy and efficient environment scanning, and can make computationally expensive matching or registration of the individual point cloud parts superfluous, or at least improve it. This is particularly interesting for mapping smaller indoor environments, for instance in robotics, where computationally expensive matching and registration algorithms have been dominant until now. In practical terms, a GPU-based point cloud renderer was implemented that is capable of processing and rendering huge and fully dynamic point clouds in high quality inside the Unreal Engine.
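The reason a tracked pose can replace registration can be sketched as follows: if the sensor's position and orientation are known for every scan, each scan can be moved into a common world frame with a single rigid transform. The snippet below is a simplified illustration under stated assumptions, not the thesis's implementation: the pose is given as a 3x3 rotation matrix plus a translation, any calibration offset between tracker and sensor is ignored, and `rotation_z` and `to_world` are hypothetical helper names.

```python
import math


def rotation_z(angle_rad):
    """3x3 rotation matrix for a rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [
        [c, -s, 0.0],
        [s, c, 0.0],
        [0.0, 0.0, 1.0],
    ]


def to_world(points, rotation, translation):
    """Apply p_world = R * p_local + t to every sensor-local point."""
    world = []
    for p in points:
        rotated = [sum(rotation[row][col] * p[col] for col in range(3)) for row in range(3)]
        world.append(tuple(rotated[axis] + translation[axis] for axis in range(3)))
    return world


# Two scans taken from different tracked poses end up in the same frame
# and can simply be concatenated, without an alignment step.
scan_a = to_world([(1.0, 0.0, 0.0)], rotation_z(0.0), (0.0, 0.0, 0.0))
scan_b = to_world([(1.0, 0.0, 0.0)], rotation_z(math.pi / 2), (1.0, 2.0, 3.0))
merged = scan_a + scan_b
```

In practice the tracking error limits how well the scans line up, which is consistent with the statement above that registration may only be improved rather than made fully superfluous.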

Files

Read the full version of the master's thesis.

Download the full project with source code for UE4.21.

The current version of our Point Cloud Renderer plugin for Unreal is also available in its GitHub repository.

The current version of the compute shader sorting plugin for Unreal is also available in its GitHub repository.

Here is a video showing the point cloud renderer visualizing both static and dynamic point clouds in real time:

Collaborations

This work was performed in collaboration with the Institute of Artificial Intelligence of the University of Bremen.

License

This original work is copyrighted by the University of Bremen.

Any software of this work is covered by the European Union Public Licence v1.2.

To view a copy of this license, visit eur-lex.europa.eu.

The thesis provided above (as a PDF file) is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Any other assets (3D models, movies, documents, etc.) are covered by the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

To view a copy of this license, visit creativecommons.org.

If you use any of the assets or software to produce a publication, you must give credit and include a reference in your publication.

If you would like to use our software in proprietary software, you can obtain an exception from the above license (also known as dual licensing).

Please contact zach at cs.uni-bremen dot de.