Hand Detection and Determination of their Orientation in Depth Images in the Field of Surgery

This bachelor's thesis deals with methods for detecting hands and determining their orientation in real time in depth images recorded during an operation. In the future, hand detection is intended to signal phases in which the OR staff is working with concentration at the surgical site, and to contribute to the calculation of a focus point of the OR staff. This information is a useful extension for the project Autonomous Surgical Lamps (ASuLa) of the University of Bremen. Approximately 1200 images from the acquired material are processed and labeled, and then used to train two different models based on Convolutional Neural Networks. The models differ in how they determine the orientation: one predicts the lengths of the vectors of a vector representation, while the other assigns each detection to an angular range.

Description

For the realization of the project, 23 recordings made with a Microsoft Kinect v2 are available.

The camera is mounted on the ceiling of the operating room and records both RGB data and depth images.

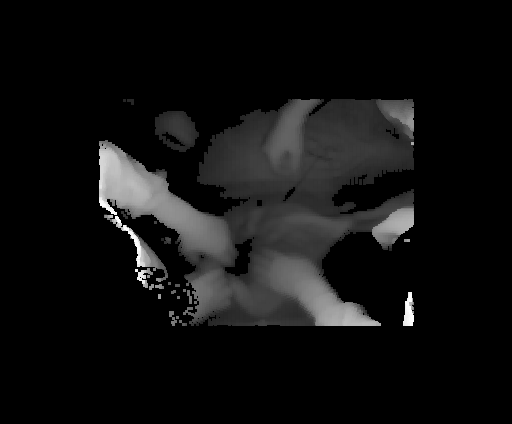

However, overexposure of the situs causes image details near the situs to be lost in the RGB images. Therefore, only the depth data is used for recognition.

To focus the recognition on the situs and to emphasize the hands, the data is preprocessed in three steps: cropping superfluous areas, normalizing the image, and applying dilation. Finally, the data is randomly augmented to generate a more diverse data set.

For hand detection, a special Convolutional Neural Network architecture is used: the Single Shot Detector (SSD). This architecture is particularly time-efficient in detection.
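The three preprocessing steps named above (cropping, normalizing, dilation) can be illustrated with a minimal sketch in plain Python. The function names, the region-of-interest format, the min-max normalization, and the square dilation window are assumptions made for illustration; the thesis may use different parameters or a library implementation.

```python
from typing import List, Tuple

Image = List[List[float]]  # depth image as rows of depth values


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Keep only a rectangular region of interest (hypothetical ROI format)."""
    return [row[left:left + width] for row in img[top:top + height]]


def normalize(img: Image) -> Image:
    """Min-max rescale to [0, 1]; a constant image maps to all zeros."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:
        return [[0.0 for _ in row] for row in img]
    return [[(v - lo) / (hi - lo) for v in row] for row in img]


def dilate(img: Image, radius: int = 1) -> Image:
    """Grayscale dilation: each pixel becomes the maximum of its
    (2 * radius + 1) x (2 * radius + 1) neighbourhood, clipped at the border."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        ys = range(max(0, y - radius), min(h, y + radius + 1))
        row = []
        for x in range(w):
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            row.append(max(img[j][i] for j in ys for i in xs))
        out.append(row)
    return out


def preprocess(depth: Image, roi: Tuple[int, int, int, int]) -> Image:
    """Apply the three steps in the order given in the text."""
    return dilate(normalize(crop(depth, *roi)))
```

Dilation as a maximum filter enlarges regions with high values. Whether the hands correspond to high or low values after normalization depends on how the depth data is encoded, which is not specified here.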

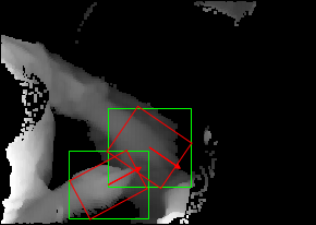

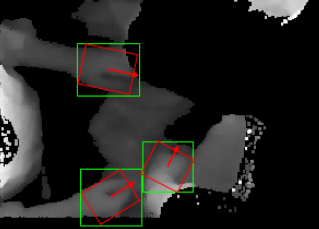

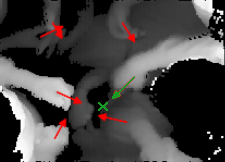

Two different methods are used to determine the orientation: classifying an angular range and predicting vector components.

In the first method, the SSD assigns each detected hand to one of several 20° ranges. In the second method, the hand is represented by two vectors, and the SSD predicts the orientation by predicting the horizontal and vertical components of these vectors.

The two methods result in two different models, which are trained on the same data set.
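The difference between the two orientation encodings can be sketched as follows. The 20° range width comes from the text; everything else is an assumption for illustration: that the ranges cover the full 360°, that angles are measured counter-clockwise from the horizontal axis, and that a single direction vector stands in for the two-vector representation used in the thesis.

```python
import math
from typing import Tuple

BIN_WIDTH_DEG = 20                   # range width stated in the text
NUM_CLASSES = 360 // BIN_WIDTH_DEG   # 18 classes, assuming full-circle coverage


def angle_to_class(angle_deg: float) -> int:
    """Classification target: index of the 20-degree range containing the angle."""
    return int((angle_deg % 360) // BIN_WIDTH_DEG)


def class_to_angle(class_idx: int) -> float:
    """Decode a class to the centre of its range (a choice made for this sketch)."""
    return class_idx * BIN_WIDTH_DEG + BIN_WIDTH_DEG / 2


def angle_to_vector(angle_deg: float) -> Tuple[float, float]:
    """Regression target: horizontal and vertical component of a unit vector."""
    rad = math.radians(angle_deg)
    return math.cos(rad), math.sin(rad)


def vector_to_angle(dx: float, dy: float) -> float:
    """Recover the angle in [0, 360) from predicted components."""
    return math.degrees(math.atan2(dy, dx)) % 360
```

With the classification encoding, decoding to the range centre limits the precision to half the range width, whereas the vector encoding is continuous and has no discontinuity between 359° and 0°.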

Results

The evaluation shows that the model based on regression of the vectors achieves better detection performance than the classification model. In recall in particular, the regression model achieves a significantly higher value. This is because neighboring classes in the classification scheme are visually very similar, which makes a reliable prediction difficult for the classification model. In determining the orientation, both models place the majority of detections within a deviation of less than 30° (classification model: 98.03%, regression model: 97.58%).
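A deviation criterion such as "less than 30°" has to account for the wrap-around at 360°. The following sketch shows one way to compute it; the function names are hypothetical and the thesis's actual evaluation code is not reproduced here.

```python
from typing import Sequence


def angular_deviation(pred_deg: float, true_deg: float) -> float:
    """Smallest absolute difference between two angles, in [0, 180]."""
    diff = abs(pred_deg - true_deg) % 360
    return min(diff, 360 - diff)


def share_within(preds: Sequence[float], truths: Sequence[float],
                 threshold_deg: float = 30.0) -> float:
    """Fraction of detections whose deviation is strictly below the threshold."""
    if len(preds) != len(truths) or not preds:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(angular_deviation(p, t) < threshold_deg
               for p, t in zip(preds, truths))
    return hits / len(preds)
```

For example, a prediction of 350° against a ground truth of 10° counts as a 20° deviation rather than 340°.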

The regression model requires a significantly shorter run time for a prediction (10 ms) than the classification model (55.3 ms). This is largely due to architectural differences between the models, such as the choice of feature extractor. It is conceivable that both models would require similar run times for a prediction if the architectural decisions were made similarly.

From the detected hand positions and their orientation, a possible focus point of the surgeon can be calculated as a prototype. This can be implemented, for example, by extending the vector of the hand in the direction in which it points.
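The idea of extending the hand vector can be sketched as below. The fixed extension length and the averaging over several hands are assumptions chosen for illustration; the text only states that the vector is extended in its pointing direction.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def extend_hand(position: Point, direction: Point, length: float) -> Point:
    """Move from the hand position along its (normalized) direction by `length`."""
    dx, dy = direction
    norm = math.hypot(dx, dy)
    if norm == 0:
        raise ValueError("direction vector must be non-zero")
    x, y = position
    return x + length * dx / norm, y + length * dy / norm


def focus_point(hands: Sequence[Tuple[Point, Point]], length: float) -> Point:
    """Average the extended points of all hands (hypothetical aggregation)."""
    if not hands:
        raise ValueError("at least one hand is required")
    tips = [extend_hand(pos, direction, length) for pos, direction in hands]
    n = len(tips)
    return sum(t[0] for t in tips) / n, sum(t[1] for t in tips) / n
```

Two hands at (0, 0) and (10, 0) pointing towards each other, each extended by 5 pixels, yield a focus point at (5, 0).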

Files

Full version of the bachelor's thesis (German only)

Videos

License

This original work is copyrighted by the University of Bremen.

Any software of this work is covered by the European Union Public Licence v1.2.

To view a copy of this license, visit eur-lex.europa.eu.

The thesis provided above (as a PDF file) is licensed under Attribution-NonCommercial-NoDerivatives 4.0 International.

Any other assets (3D models, movies, documents, etc.) are covered by the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

To view a copy of this license, visit creativecommons.org.

If you use any of the assets or software to produce a publication, then you must give credit and put a reference in your publication.

If you would like to use our software in proprietary software, you can obtain an exception from the above license (also known as dual licensing).

Please contact zach at cs.uni-bremen dot de.