Autonomous Surgical Lamps

Note: This is the predecessor project of SmartOT. For research interests in this area, please contact the SmartOT project.

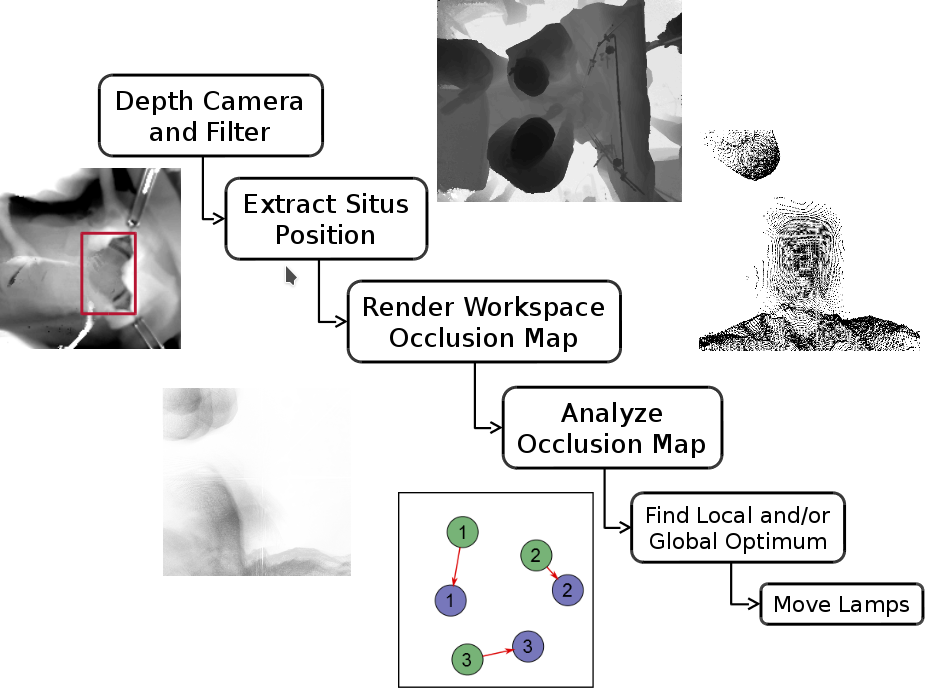

As part of the Creative Unit - Intra-Operative Information, we are developing algorithms for the autonomous positioning of surgical lamps in open surgery. These algorithms rely solely on the input of a single depth camera, which is positioned above the patient during the surgery. The algorithms identify the operation site (also known as the situs) and all possible occlusions. They then move the lamps to avoid occlusions and collisions while keeping the amount of lamp movement over time as small as feasible.

The basic idea is to take the point cloud provided by the depth camera and render it from the perspective of the situs, looking towards the lamps' workspace above the operating table. This rendering directly tells us which parts of the lamps' workspace are occluded and which are not. To minimize movement over time, we also use information about past occlusions and movements to position the lamps in areas that are most likely to remain unoccluded in the future. We arranged the algorithms in a pipeline that takes the depth image from the depth camera as input, analyzes it to find the situs, and finally outputs the current optimal positions for a given set of lamps.
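The occlusion test described above can be illustrated with a small sketch. It is not the project's implementation: the binning into azimuth and elevation cells, the function names `occlusion_map` and `nearest_free_cell`, and the parameters `max_range` and `min_range` are all assumptions made for illustration. Each point of the cloud is viewed from the situs, and the direction cell it falls into is marked as occluded. A lamp is then assigned to the free cell closest to its current cell, as a simple stand-in for minimizing movement.

```python
import math

def occlusion_map(points, situs, n_az=36, n_el=9, max_range=1.5, min_range=0.05):
    """Mark direction cells (elevation x azimuth), seen from the situs, as occluded.

    points: iterable of (x, y, z) in metres, z pointing up (assumed convention).
    max_range: assumed distance from the situs to the lamp workspace.
    """
    grid = [[False] * n_az for _ in range(n_el)]
    sx, sy, sz = situs
    for x, y, z in points:
        dx, dy, dz = x - sx, y - sy, z - sz
        dist = math.sqrt(dx * dx + dy * dy + dz * dz)
        # Ignore points below the situs, too close to it, or beyond the workspace.
        if dz <= 0 or dist < min_range or dist > max_range:
            continue
        az = math.atan2(dy, dx) % (2 * math.pi)   # 0 .. 2*pi
        el = math.asin(dz / dist)                 # 0 .. pi/2
        i = min(int(el / (math.pi / 2) * n_el), n_el - 1)
        j = min(int(az / (2 * math.pi) * n_az), n_az - 1)
        grid[i][j] = True
    return grid

def nearest_free_cell(grid, current):
    """Return the unoccluded (elevation, azimuth) cell closest to the current cell."""
    n_el, n_az = len(grid), len(grid[0])
    ci, cj = current
    best, best_cost = None, None
    for i in range(n_el):
        for j in range(n_az):
            if grid[i][j]:
                continue
            dj = abs(j - cj)
            dj = min(dj, n_az - dj)               # azimuth wraps around
            cost = (i - ci) ** 2 + dj ** 2
            if best_cost is None or cost < best_cost:
                best, best_cost = (i, j), cost
    return best
```

A fuller version would accumulate such maps over time, so that cells which were frequently occluded in the past are avoided. That step is omitted here.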

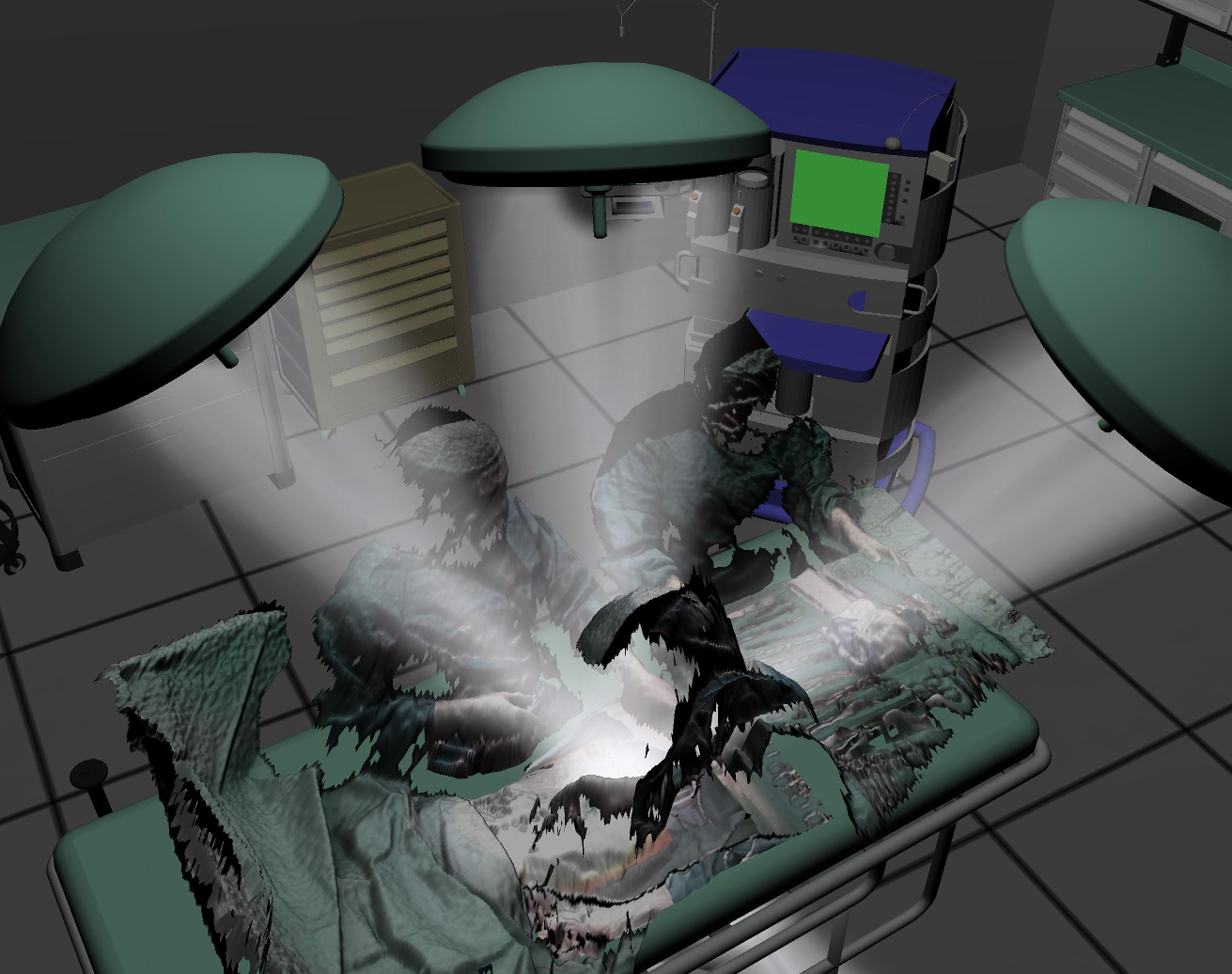

Our pipeline with illustrations of the outputs of some stages (left). A screenshot of our testing and visualization environment with input data from a real surgery (right).

Publications

- Autonomous Surgical Lamps, Jahrestagung der Deutschen Gesellschaft für Computer- und Roboterassistierte Chirurgie (CURAC), 2015. [BibTex]

- Optimized Positioning of Autonomous Surgical Lamps, SPIE Medical Imaging, Orlando, FL, USA, February 11 - 16, 2017. [BibTex]

Videos on YouTube

Download the video: ASuLa_inDepth.mp4

Video on local TV station

Download the video: ButenUnBinnen_CU-IOI.mp4 (Source: Radio Bremen, Buten un Binnen)

Towards Physically Correct Lighting Simulation

An important part of improving the lighting of the surgical site is being able to estimate the expected illumination of the site for different lamp positions. In computer graphics, ray tracing is used to produce photorealistic renderings of arbitrary scenes. Ray tracing can simulate light in a physically correct manner, which is why we chose it for our project. Unfortunately, our only representation of the scene is a point cloud, which is harder to ray-trace. To quickly evaluate different configurations of lamp positions, we therefore developed fast ray-tracing methods for point clouds based on NVIDIA's OptiX ray tracing engine. A video of this in action can be seen below.
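To make the idea of ray-tracing a point cloud concrete, here is a minimal CPU sketch of a shadow-ray test. It is not the OptiX-based method developed in the project: it assumes that every point is treated as a small sphere of fixed `radius` (a simple splat model chosen for illustration) and tests all points by brute force. The function names `ray_hits_sphere` and `is_lit` are hypothetical.

```python
import math

def ray_hits_sphere(origin, direction, center, radius):
    """Return the nearest positive hit distance along the ray, or None.

    direction must be a unit vector.
    """
    ox, oy, oz = (origin[k] - center[k] for k in range(3))
    b = ox * direction[0] + oy * direction[1] + oz * direction[2]
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - c
    if disc < 0:
        return None                      # the ray misses the sphere
    sq = math.sqrt(disc)
    for t in (-b - sq, -b + sq):
        if t > 1e-9:
            return t
    return None

def is_lit(target, lamp, points, radius=0.01):
    """Shadow-ray test: True if no point splat blocks the segment target -> lamp."""
    d = [lamp[k] - target[k] for k in range(3)]
    length = math.sqrt(sum(v * v for v in d))
    d = [v / length for v in d]
    for p in points:
        if math.dist(p, target) <= radius:
            continue                     # skip the splat the target itself sits on
        t = ray_hits_sphere(target, d, p, radius)
        if t is not None and t < length:
            return False
    return True
```

For example, `is_lit((0, 0, 0), (0, 0, 1), [(0, 0, 0.5)])` reports the target as shadowed, because the single point lies on the line between target and lamp. A practical implementation would replace the linear loop over all points with an acceleration structure, which is what makes GPU ray-tracing engines fast.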

Download the video: Raytraced_Pointclouds.mp4

RGB-D Data

The following downloadable data is a recording of a complete open abdominal surgery. It was recorded with a custom recording program using a Microsoft Kinect v2 mounted directly above the patient. The recordings are stored in HDF5 files, which can be read using the appropriate HDF5 library and the following C++ class: header, source. The files are also compressed with gzip to save server space. Each file contains at most 27000 frames, which corresponds to roughly 16-17 minutes of recording.
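Because the files are large, it is advisable to decompress them in a streaming fashion rather than loading them into memory. The following sketch uses only the Python standard library. The helper name `gunzip` and the chunk size are assumptions for illustration, not part of the provided software.

```python
import gzip
import shutil
from pathlib import Path

def gunzip(src, dst=None, chunk_size=16 * 1024 * 1024):
    """Decompress a .gz file chunk by chunk and return the output path.

    If dst is omitted, the trailing ".gz" is dropped, for example
    "recording.h5.gz" -> "recording.h5".
    """
    src = Path(src)
    dst = Path(dst) if dst is not None else src.with_suffix("")
    with gzip.open(src, "rb") as f_in, open(dst, "wb") as f_out:
        shutil.copyfileobj(f_in, f_out, chunk_size)
    return dst
```

The resulting `.h5` file can then be opened with any HDF5 library. The internal layout of the files is not described on this page, so it is best to inspect the file first (for instance with the `h5dump` tool or h5py's `File.visit`) or to use the C++ class mentioned above.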

This data may only be used for scientific purposes. If you publish research that uses this material, please cite the above paper. Please also contact us; we are always interested to hear what others are doing with this data.

- output_2016-02-04_09-34.h5.gz Compressed file size: 17GB

- output_2016-02-04_09-34.h5.gz Compressed file size: 5.4GB

- output_2016-02-04_09-55.h5.gz Compressed file size: 25GB

- output_2016-02-04_10-10.h5.gz Compressed file size: 19GB

- output_2016-02-04_10-26.h5.gz Compressed file size: 2.9GB

- output_2016-02-04_10-29.h5.gz Compressed file size: 9.2GB

- output_2016-02-04_10-44.h5.gz Compressed file size: 380MB

- output_2016-02-04_11-21.h5.gz Compressed file size: 10GB

- output_2016-02-04_11-37.h5.gz Compressed file size: 13GB

- output_2016-02-04_11-52.h5.gz Compressed file size: 16GB

- output_2016-02-04_12-08.h5.gz Compressed file size: 8.7GB

- output_2016-02-04_12-24.h5.gz Compressed file size: 6.5GB

- output_2016-02-04_12-40.h5.gz Compressed file size: 7.8GB

- output_2016-02-04_12-57.h5.gz Compressed file size: 3.6GB

- output_2016-02-04_13-23.h5.gz Compressed file size: 2.5GB

- output_2016-02-04_13-40.h5.gz Compressed file size: 8.9GB

- output_2016-02-04_13-57.h5.gz Compressed file size: 1.6GB

- output_2016-02-04_14-14.h5.gz Compressed file size: 85MB

- output_2016-02-04_14-31.h5.gz Compressed file size: 15GB

- output_2016-02-04_14-48.h5.gz Compressed file size: 12GB

- output_2016-02-04_15-03.h5.gz Compressed file size: 13GB

- output_2016-02-04_15-19.h5.gz Compressed file size: 4.4GB

- output_2016-02-04_15-34.h5.gz Compressed file size: 2.4GB

As this is real-life data, there are long stretches of the recording where a surgical lamp obstructs most of the Kinect's field of view. As a rule of thumb, the smaller the compressed file size, the larger and longer the obstruction of the field of view.

This work was partially supported by the grant Creative Unit - Intra-Operative Information.

License

This original work is copyrighted by the University of Bremen.

Any software of this work is covered by the European Union Public Licence v1.2.

To view a copy of this license, visit eur-lex.europa.eu.

The Thesis provided above (as PDF file) is licensed under Attribution-NonCommercial-NoDerivatives 4.0 International.

Any other assets (3D models, movies, documents, etc.) are covered by the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

To view a copy of this license, visit creativecommons.org.

If you use any of the assets or software to produce a publication, then you must give credit and put a reference in your publication.

If you would like to use our software in proprietary software, you can obtain an exception from the above license (i.e., dual licensing). Please contact zach at cs.uni-bremen dot de.