Grasp Type Detection

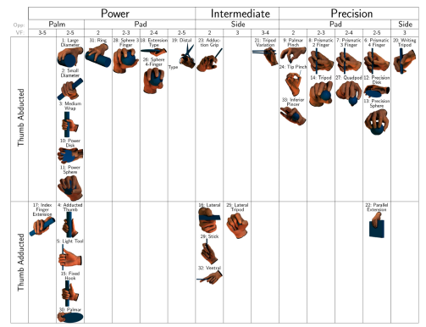

The grasp types we want to distinguish are those defined in the GRASP taxonomy. Similar grasps are grouped together and are essentially differentiated by the size and shape of the grasped object. We chose this classification because we expect more objects to be added to the Virtual Cooking project in the future, and we believe it will make it easier for us to add the new grasps that result from them.

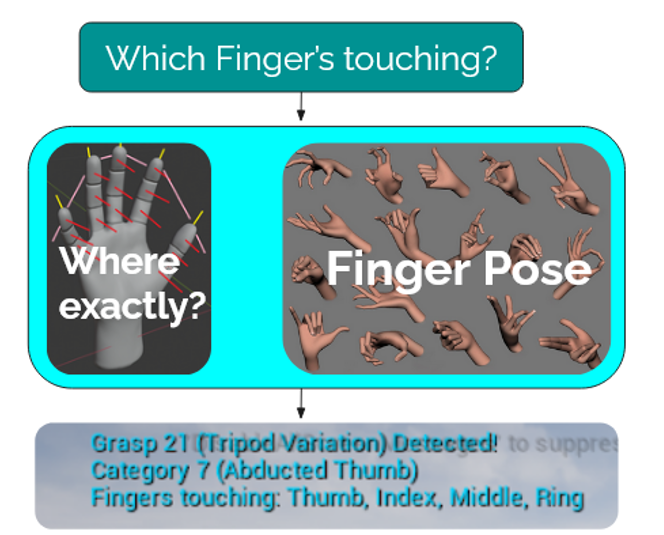

The Grasp Type Detection consists of two main steps. The first step detects which fingers are touching the object, for which we use raycasting. The second step takes a closer look at where exactly the fingers touch the object (from the side, along the whole finger, or only with the fingertips). For this we shoot rays facing the palm of the hand from the very tip of each finger, from points in between, and from each joint. Additionally, we take the finger pose into account (whether a finger is curved or straight, and whether it touches the palm), for which we calculated rotation angle thresholds for those positions. The output of our implementation states the name and number of the grasp as given by the GRASP taxonomy, the group number we assigned following the groups in that classification, and the thumb orientation (adducted or abducted), since that is the most important distinction in the GRASP taxonomy. Because the touching fingers are the most important information in our detection, we complete the output with the list of fingers touching the object.
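The second step and the pose check can be sketched roughly as follows. This is a minimal illustration under several assumptions, not our implementation: each finger's palm-facing ray results arrive as a list of hit flags ordered from fingertip to knuckle, finger pose is judged from the summed joint flexion angles (a crude proxy), and the threshold constants are hypothetical placeholders rather than our calibrated values. The rule that a finger reported as touching in step one, but with no palm-facing ray hit, touches with its side is also an assumption.

```python
from enum import Enum

# HYPOTHETICAL thresholds in degrees; the calibrated project values are not reproduced here.
CURVED_THRESHOLD_DEG = 60.0           # summed flexion above this -> finger counts as curved
PALM_THRESHOLD_DEG = 200.0            # summed flexion above this -> finger assumed to touch the palm
THUMB_ABDUCTION_THRESHOLD_DEG = 30.0  # thumb abduction above this -> abducted


class ContactLocation(Enum):
    NONE = "none"
    TIPS = "fingertip only"
    WHOLE = "whole finger"
    PARTIAL = "part of the finger"
    SIDE = "side"


def contact_location(touching: bool, ray_hits: list[bool]) -> ContactLocation:
    """Classify where a finger touches the object.

    touching: result of step one for this finger.
    ray_hits: one flag per palm-facing ray, ordered from the fingertip
              towards the knuckle (tip, in-between points, joints).
    """
    if not ray_hits:
        raise ValueError("need at least one palm-facing ray per finger")
    if not touching:
        return ContactLocation.NONE
    if all(ray_hits):
        return ContactLocation.WHOLE
    if ray_hits[0] and not any(ray_hits[1:]):
        return ContactLocation.TIPS
    if any(ray_hits):
        return ContactLocation.PARTIAL
    # Assumption: touching, yet no palm-facing ray hits -> contact from the side.
    return ContactLocation.SIDE


def finger_pose(joint_flexion_deg: list[float]) -> str:
    """Classify a finger as straight, curved or touching the palm from its joint angles."""
    total = sum(joint_flexion_deg)
    if total > PALM_THRESHOLD_DEG:
        return "touching palm"
    if total > CURVED_THRESHOLD_DEG:
        return "curved"
    return "straight"


def thumb_orientation(abduction_deg: float) -> str:
    """Map the thumb abduction angle to the GRASP taxonomy's thumb distinction."""
    return "abducted" if abduction_deg > THUMB_ABDUCTION_THRESHOLD_DEG else "adducted"
```

For example, a touching index finger where only the tip ray hits yields `ContactLocation.TIPS`, which is the kind of cue that separates pinch grasps from wrapping grasps.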

Grasp Taxonomy

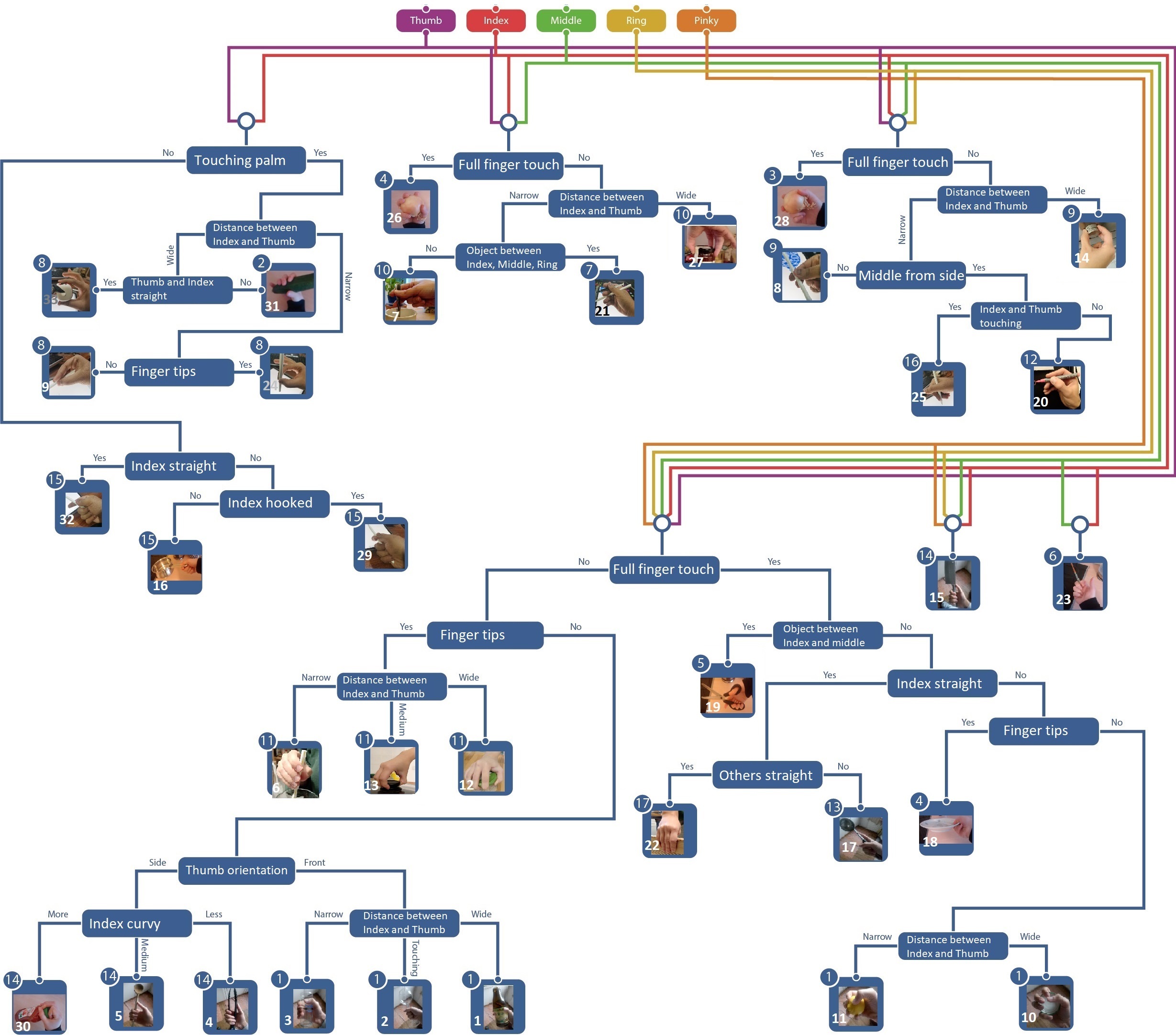

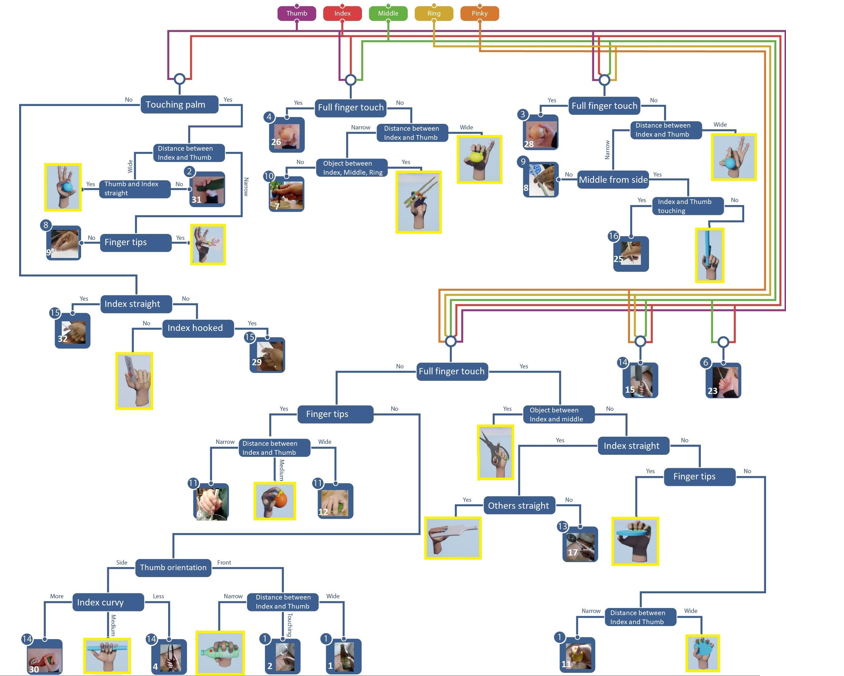

To test whether the GRASP taxonomy really holds up in the kitchen application, we recreated the grasps in our real kitchen environments; these are the photos used in our algorithm overview. We distinguish between the grasps by following this decision tree: it starts from the combination of fingers touching the object and, as mentioned above, continues with distinctions based on where the fingers touch the object or on the finger pose.
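A decision tree of this shape can be expressed as a lookup on the set of touching fingers followed by an ordered list of refinement tests. The sketch below is illustrative only: the grasp names are taken from the GRASP taxonomy, but the rules attached to them, the per-finger labels ("tip", "side", "whole", "touching palm") and the `classify` helper are hypothetical placeholders, not our actual tree. The taxonomy number and group number that our output also reports are left out here rather than guessed.

```python
from dataclasses import dataclass
from typing import Callable, Optional

FINGERS = ("thumb", "index", "middle", "ring", "little")


@dataclass(frozen=True)
class GraspResult:
    name: str                   # grasp name from the GRASP taxonomy
    thumb: str                  # "abducted" or "adducted"
    touching: tuple[str, ...]   # fingers touching the object, in anatomical order


# A rule pairs a test on (contact locations, finger poses) with a grasp name.
Rule = tuple[Callable[[dict[str, str], dict[str, str]], bool], str]

# HYPOTHETICAL tree: first level keyed by touching fingers, rules tried in order.
DECISION_TREE: dict[frozenset[str], list[Rule]] = {
    frozenset({"thumb", "index"}): [
        (lambda loc, pose: loc["index"] == "tip", "Tip Pinch"),
        (lambda loc, pose: loc["index"] == "side", "Lateral"),
    ],
    frozenset(FINGERS): [
        (lambda loc, pose: pose["index"] == "touching palm", "Small Diameter"),
        (lambda loc, pose: True, "Large Diameter"),  # fallback for this finger set
    ],
}


def classify(touching: set[str], locations: dict[str, str],
             poses: dict[str, str], thumb: str) -> Optional[GraspResult]:
    """Walk the tree: pick the branch for the touching fingers, then the first matching rule."""
    key = frozenset(touching)
    for test, name in DECISION_TREE.get(key, []):
        if test(locations, poses):
            ordered = tuple(f for f in FINGERS if f in key)
            return GraspResult(name, thumb, ordered)
    return None  # no grasp defined for this combination
```

Keying the first level on a `frozenset` mirrors the tree's starting point (which fingers touch), and keeping the refinements in ordered lists makes it straightforward to add a branch when a new kitchen object introduces a new grasp.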

Future Work

As future work, we foresee more extensive data gathering once the broken Cyber Glove sensors are fixed. This data could be used to improve our rotation angle thresholds for the finger pose with machine learning, as well as to test our detection on a number of participants. So far, our data has been gathered in our Cyber Glove simulation, so the input is always the same: the test hand had its standard size, and its movements were restricted to small intervals. Both would certainly differ if a variety of people performed these grasps in the kitchen environment.

Another interesting aspect would be combining the grasp type detection with the heatmaps and forcemaps in one application. To unify the techniques used, it could make sense to replace the raycasting used for detecting fingers touching the object with another form of collision detection: the Inner Sphere Tree colliders that originate here in the CGVR group.
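To illustrate how a sphere-based collider could take over the "which fingers touch" step, here is a deliberately simplified sketch: each finger is approximated by a few spheres, the object interior is filled with spheres, and a finger counts as touching if any pair overlaps. This flat brute-force check is only the overlap test at the leaves; the actual Inner Sphere Tree organizes the inner spheres in a hierarchy so that most pairs are never tested, which this sketch does not do. The `Sphere` type and `touching_fingers` helper are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sphere:
    x: float
    y: float
    z: float
    r: float


def spheres_overlap(a: Sphere, b: Sphere) -> bool:
    """Two spheres intersect if the centre distance is at most the sum of the radii."""
    dist_sq = (a.x - b.x) ** 2 + (a.y - b.y) ** 2 + (a.z - b.z) ** 2
    return dist_sq <= (a.r + b.r) ** 2  # compare squared values to avoid a sqrt


def touching_fingers(finger_spheres: dict[str, list[Sphere]],
                     object_spheres: list[Sphere]) -> set[str]:
    """Return the fingers whose collision spheres overlap any sphere filling the object.

    Brute force over all pairs; a sphere hierarchy would prune most of these tests.
    """
    return {
        finger
        for finger, spheres in finger_spheres.items()
        if any(spheres_overlap(f, o) for f in spheres for o in object_spheres)
    }
```

The resulting set of finger names could feed the same decision tree that the raycasting result feeds today, so only the contact-detection front end would change.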

Here you can find the results of our work (the readme files in each folder contain more detailed information):

- Our repository holds all our results.

- Gathered data (Cyber Glove data gathering, lab experience, simulated data, data analysis, modelled kitchen items, data set planning, spheres for collision detection, data analysis with grasp seeds, results of our detection): Click Here

- Further documentation (algorithm planning, raycasting and GRASP taxonomy application): Click Here

- Grasp Type Detection for Cyber Glove 3 (how it is used, which functions are available and how to configure the keyboard controller): Click Here

- Recorder for Cyber Glove 3: Click Here

- Documentation of open tasks for future work: Click Here

- Tutorials we wrote over the course of the project: Click Here