KaNaRiA

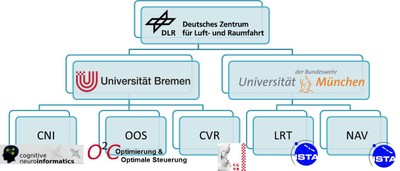

KaNaRiA (from its German acronym: Kognitionsbasierte, autonome Navigation am Beispiel des Ressourcenabbaus im All, roughly "cognition-based autonomous navigation using the example of resource extraction in space") is a collaborative project of the University of Bremen (institute for computer graphics and virtual reality, institute for cognitive neuroinformatics, institute for optimisation and optimal control) and the Universität der Bundeswehr in Munich (institute of space systems and institute of space navigation), financed by the German Aerospace Centre (DLR, Deutsches Zentrum für Luft- und Raumfahrt). AO-Car, the terrestrial follow-up project of KaNaRiA, researches novel autonomous car manoeuvres.

The extraction of asteroid resources is of high interest for a great number of upcoming deep space missions aiming at a combined industrial, commercial and scientific utilization of space. One main technological enabler for mission concepts in deep space is on-board autonomy. Such mission concepts generally include long cruise phases, multi-body fly-bys, planetary approach and rendezvous, orbiting in a-priori unknown dynamic environments, controlled descent, surface navigation and precise soft landing, docking or impacting.


The project comprises two major goals:

- To perform a feasibility study of an autonomous asteroid mining mission

- To build a software mission simulator to serve as a platform for the development, testing and verification of autonomous spacecraft navigation algorithms

In summary, our contributions to KaNaRiA are:

- novel wait-free software infrastructure for realtime interactive systems

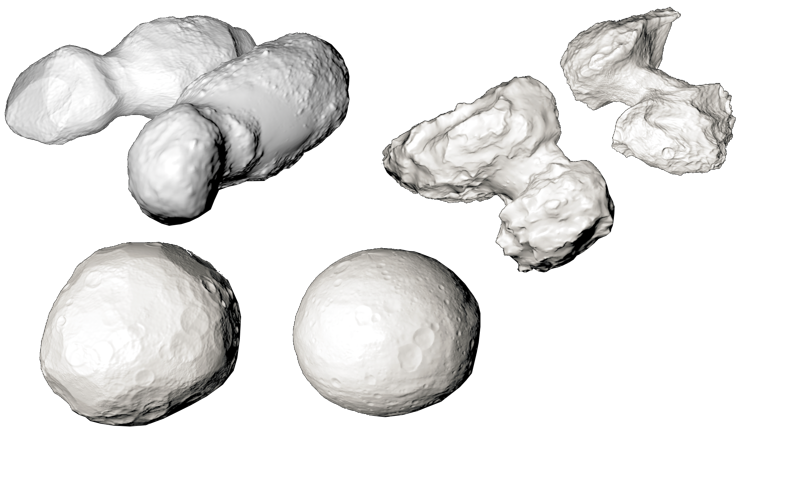

- toolchain for procedurally generating asteroid shapes and surface textures

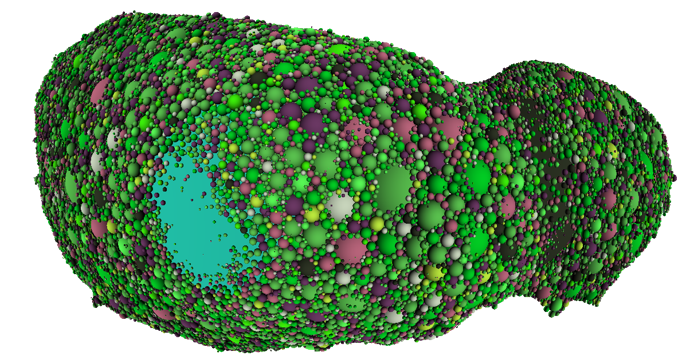

- novel sphere-packing based gravity field modelling for homogeneous and inhomogeneous mass distributions, with GPGPU based computation

- immersive and interactive assembly of the KaNaRiA spacecraft design in Unreal Engine

- raytracing library in Ogre3D for synthesizing camera and lidar data

- artistically enhanced spacecraft models

- visualization of the simulation in Ogre3D

- promotional pictures and videos for illustrating the project
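The sphere-packing gravity model listed above lends itself to a short illustration. The following is a minimal CPU sketch, not the project's GPGPU implementation: it assumes the body has already been packed with homogeneous spheres, each given as a centre, a radius and a mass, and sums their contributions to the gravitational acceleration at a query point. The function name `gravity_from_spheres` and the data layout are hypothetical and chosen for illustration only.

```python
import math

G = 6.67430e-11  # gravitational constant in m^3 kg^-1 s^-2


def gravity_from_spheres(point, spheres):
    """Return the gravitational acceleration (ax, ay, az) at `point`.

    `spheres` is an iterable of (center, radius, mass) tuples, where each
    sphere is assumed to be homogeneous (an assumption of this sketch).
    Outside a homogeneous sphere the field equals that of a point mass at
    its centre; inside, only the mass enclosed within the distance r
    contributes (shell theorem).
    """
    ax = ay = az = 0.0
    for (cx, cy, cz), radius, mass in spheres:
        # Vector from the query point towards the sphere centre.
        dx, dy, dz = cx - point[0], cy - point[1], cz - point[2]
        r = math.sqrt(dx * dx + dy * dy + dz * dz)
        if r == 0.0:
            continue  # the field vanishes at the centre of a homogeneous sphere
        if r < radius:
            enclosed = mass * (r / radius) ** 3  # mass inside radius r
        else:
            enclosed = mass
        scale = G * enclosed / r ** 3
        ax += scale * dx
        ay += scale * dy
        az += scale * dz
    return (ax, ay, az)


# Two spheres with different masses approximate an inhomogeneous body.
packing = [((0.0, 0.0, 0.0), 100.0, 4.0e9), ((150.0, 0.0, 0.0), 50.0, 1.0e9)]
acceleration = gravity_from_spheres((0.0, 400.0, 0.0), packing)
```

Because every sphere contributes independently, the loop body maps naturally onto one GPU thread per sphere or per query point, which is presumably why such a model suits GPGPU evaluation; the sketch does not attempt this.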

Related Publications

- Fast and Accurate Simulation of Gravitational Field of Irregular-shaped Bodies using Polydisperse Sphere Packings, ICAT-EGVE 2017, Adelaide, Australia, November 22 - 24, 2017 [BibTex]

- Invariant Local Shape Descriptors: Classification of Large-Scale Shapes with Local Dissimilarities, Computer Graphics International 2017, Yokohama, Japan, June 27 - 30, 2017 [BibTex]

- GDS: Gradient based Density Spline Surfaces for Multiobjective Optimization in Arbitrary Simulations, ACM SIGSIM PADS 2017, Singapore, May 24 - 26, 2017 [BibTex]

- Intelligent Realtime 3D Simulations, ACM SIGSIM PADS 2016, Banff, May 15 - 18, 2016 [BibTex]

- Knowledge Discovery for Pareto based Multiobjective Optimization in Simulation, ACM SIGSIM PADS 2016, Banff, May 15 - 18, 2016 [BibTex]

- GraphPool: A High Performance Data Management for 3D Simulations, ACM SIGSIM PADS 2016, Banff, May 15 - 18, 2016 [BibTex]

- Wait-Free Hash Maps in the Entity-Component-System Pattern for Realtime Interactive Systems, IEEE VR: 9th Workshop on Software Engineering and Architectures for Realtime Interactive Systems SEARIS 2016, Greenville, SC, USA, March 19 - 23, 2016 [BibTex]

- Kanaria: Identifying the Challenges for Cognitive Autonomous Navigation and Guidance for Missions to Small Planetary Bodies, Jerusalem, Israel, October 12 - 16, 2015

- Multi Agent System Optimization in Virtual Vehicle Testbeds, EAI SIMUtools, Athens, Greece, August 24 - 26, 2015 [BibTex]

- Scalable Concurrency Control for Massively Collaborative Virtual Environments, ACM Multimedia Systems - Massively Multiuser Virtual Environments, Portland, United States, March 18 - 20, 2015 [BibTex]

- Virtual Reality for Simulating Autonomous Deep-Space Navigation and Mining, 24th International Conference on Artificial Reality and Telexistence (ICAT-EGVE 2014), Bremen, Germany, December 8 - 10, 2014 [BibTex]

- A Framework for Wait-Free Data Exchange in Massively Threaded VR Systems, International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision (WSCG), Plzen, Czech Republic, June 2 - 5, 2014 [BibTex]

Invited Talks

Videos

Our KaNaRiA image movie, illustrating the main concepts of the KaNaRiA project.

Our visualization of the particle filter used for spacecraft localization.

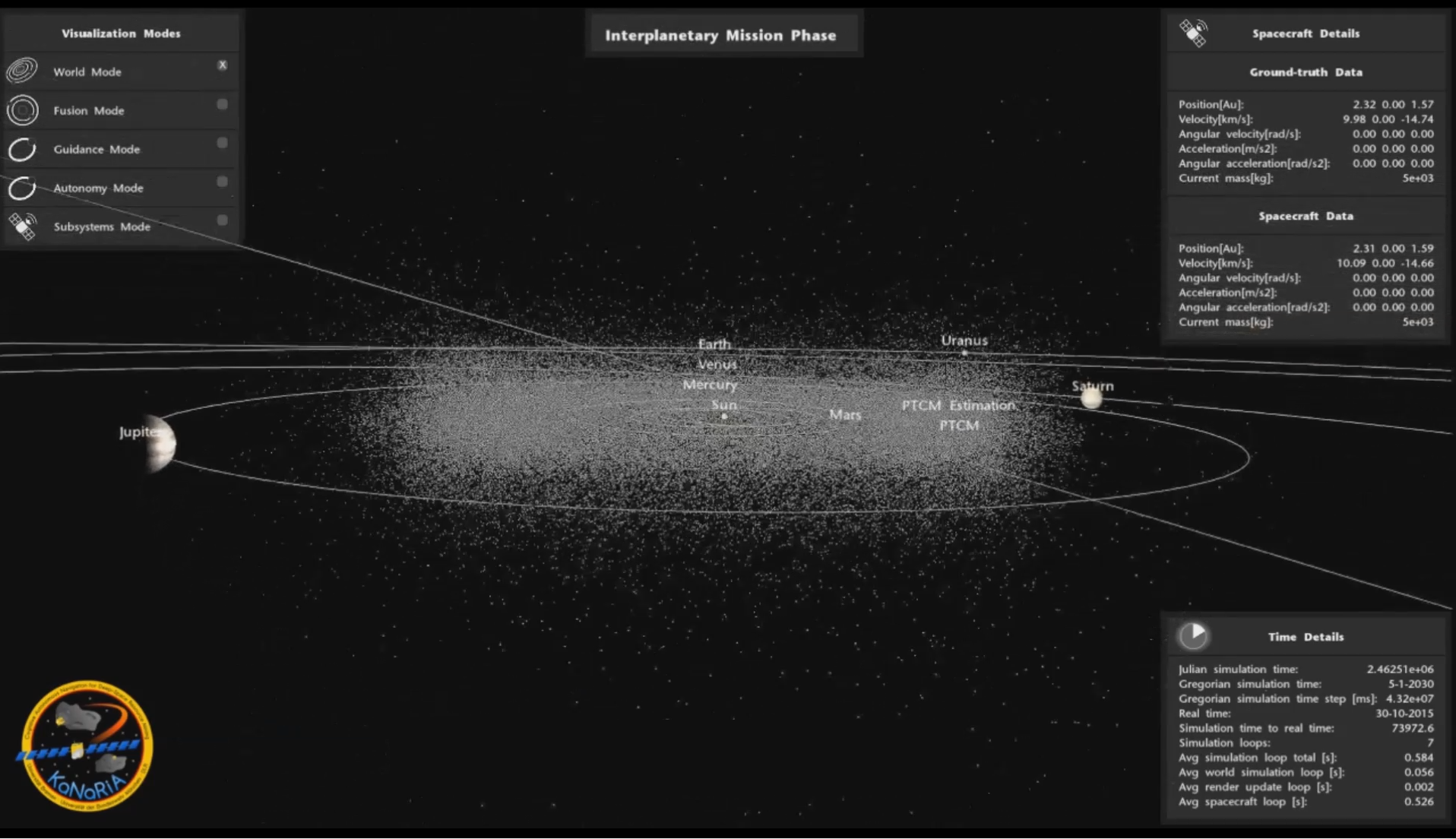

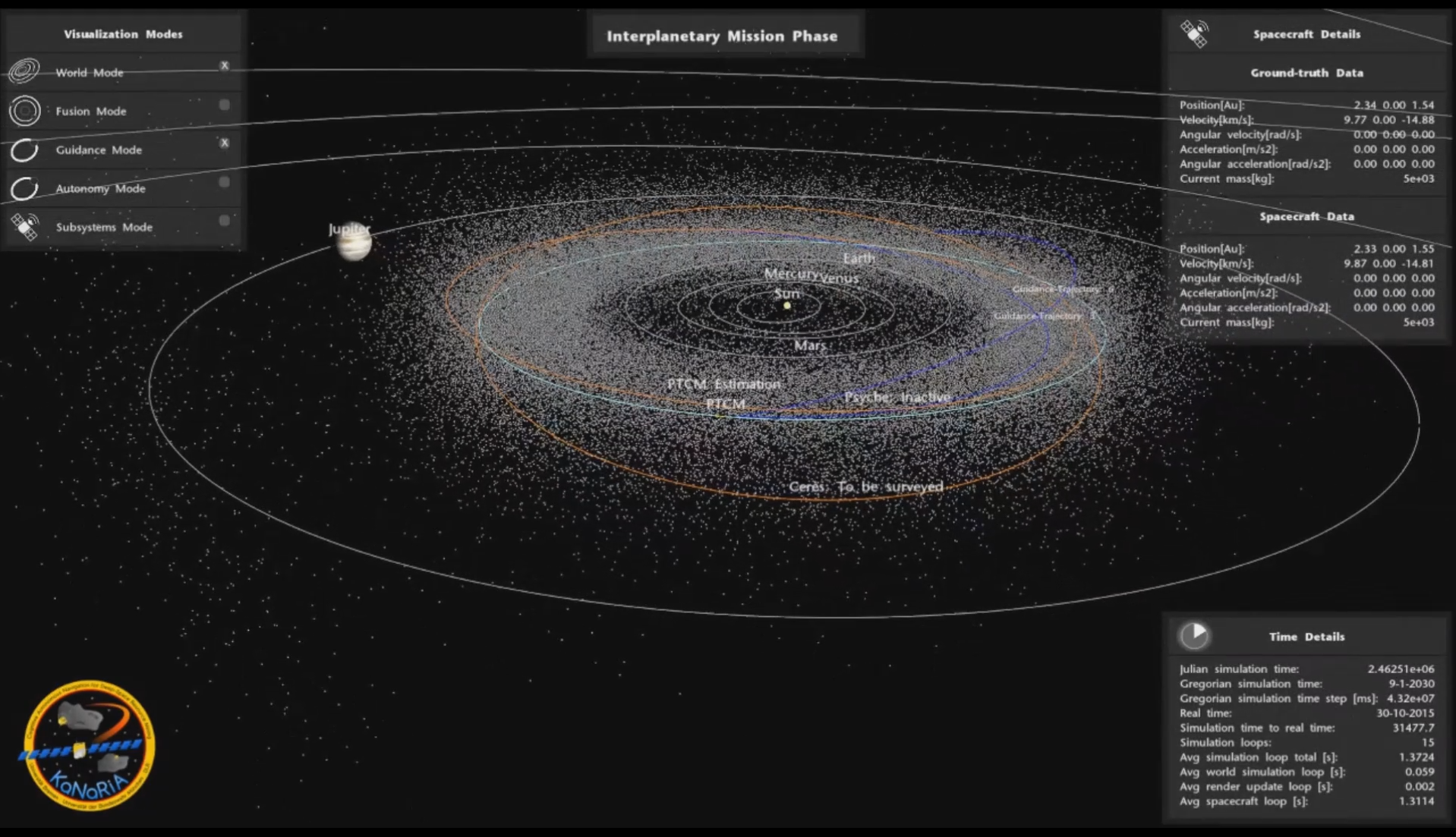

Our visualization of the asteroid main belt and the corresponding cruise phase operations: optimal trajectories are flown by the spacecraft.

Our visualization of the asteroid main belt and the corresponding cruise phase operations, showcasing the rendering of 200,000 asteroids.

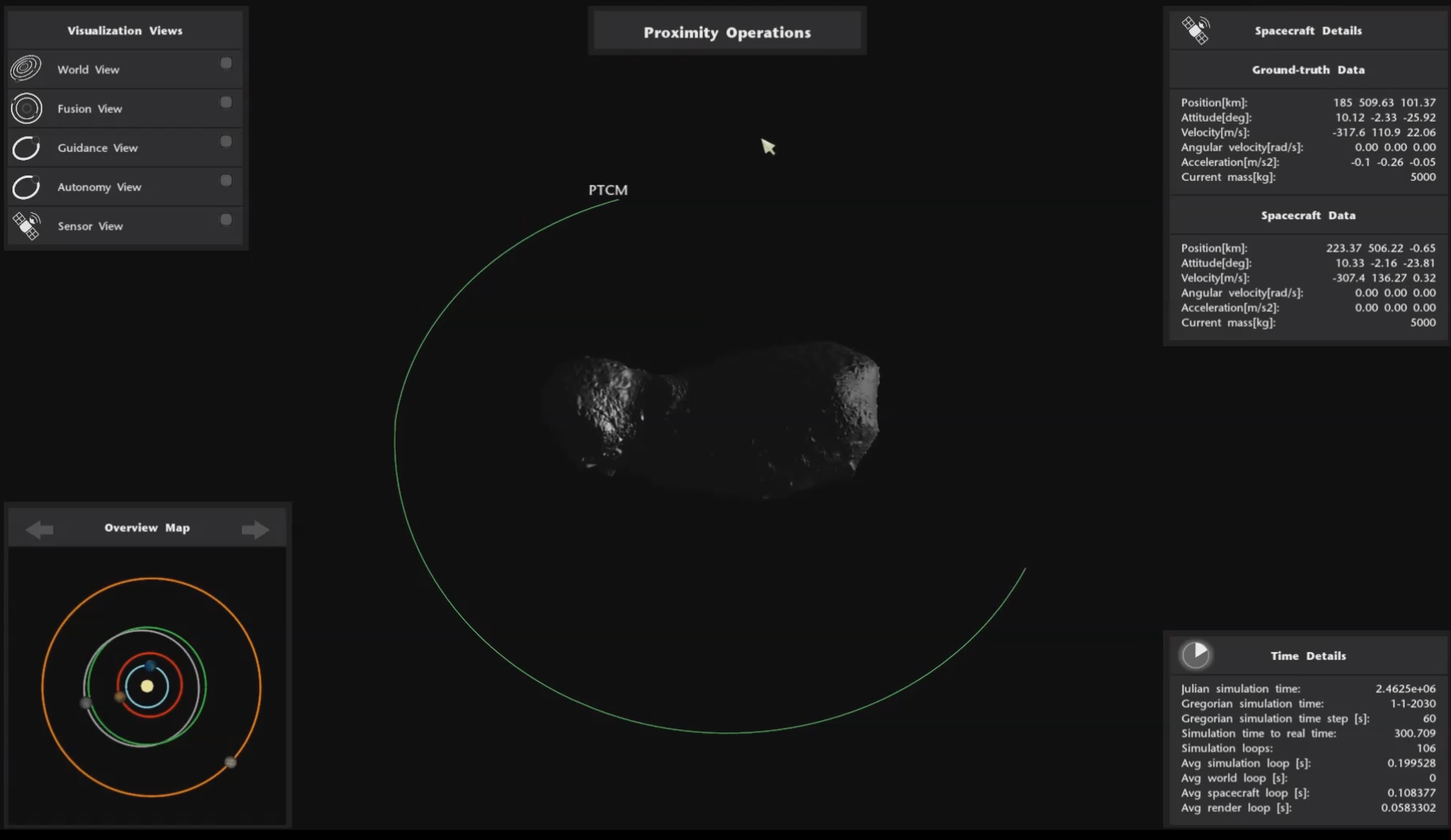

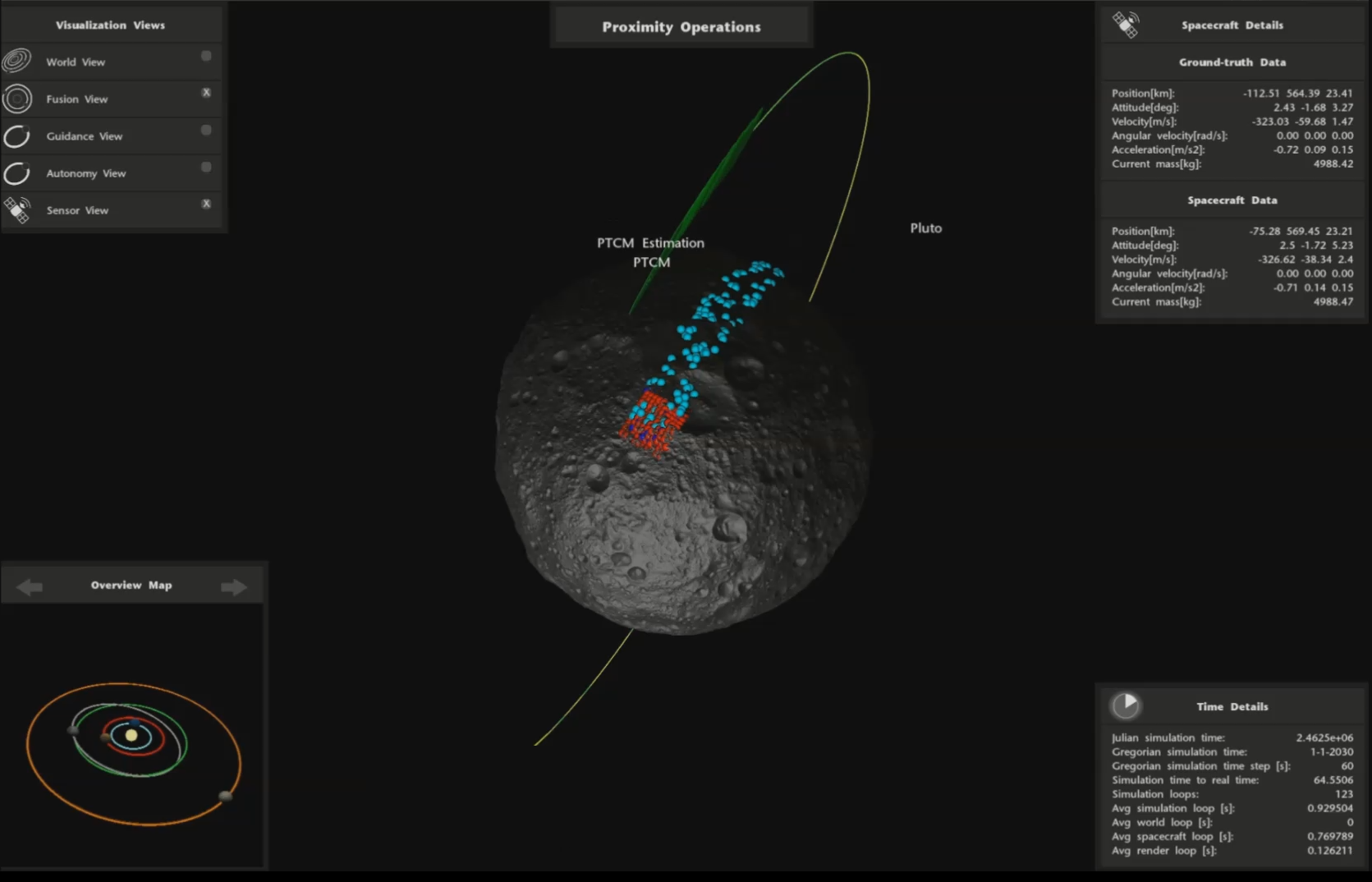

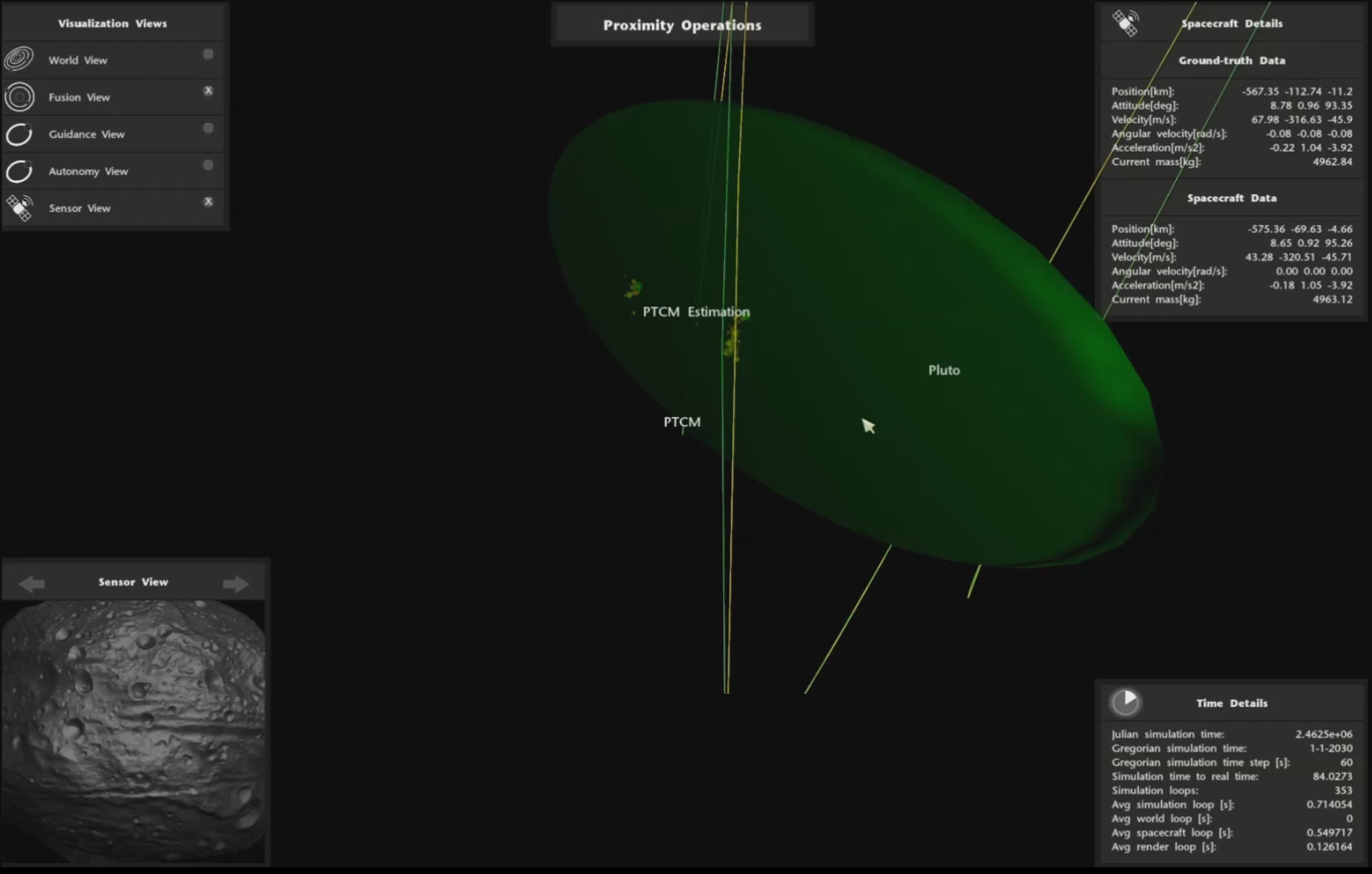

Proximity phase operations: visualizing the SLAM approach from the institute for cognitive neuroinformatics.

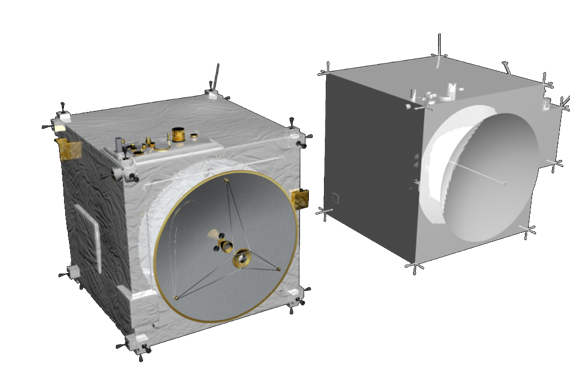

Our demo overview of the PTCM spacecraft concept, which was developed by the Universität der Bundeswehr München.

Our artistic visualization of the ReDoLa landing sequence.

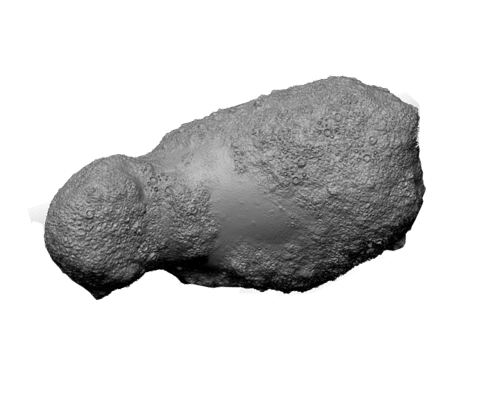

Our procedurally generated asteroids for arbitrary simulation purposes.

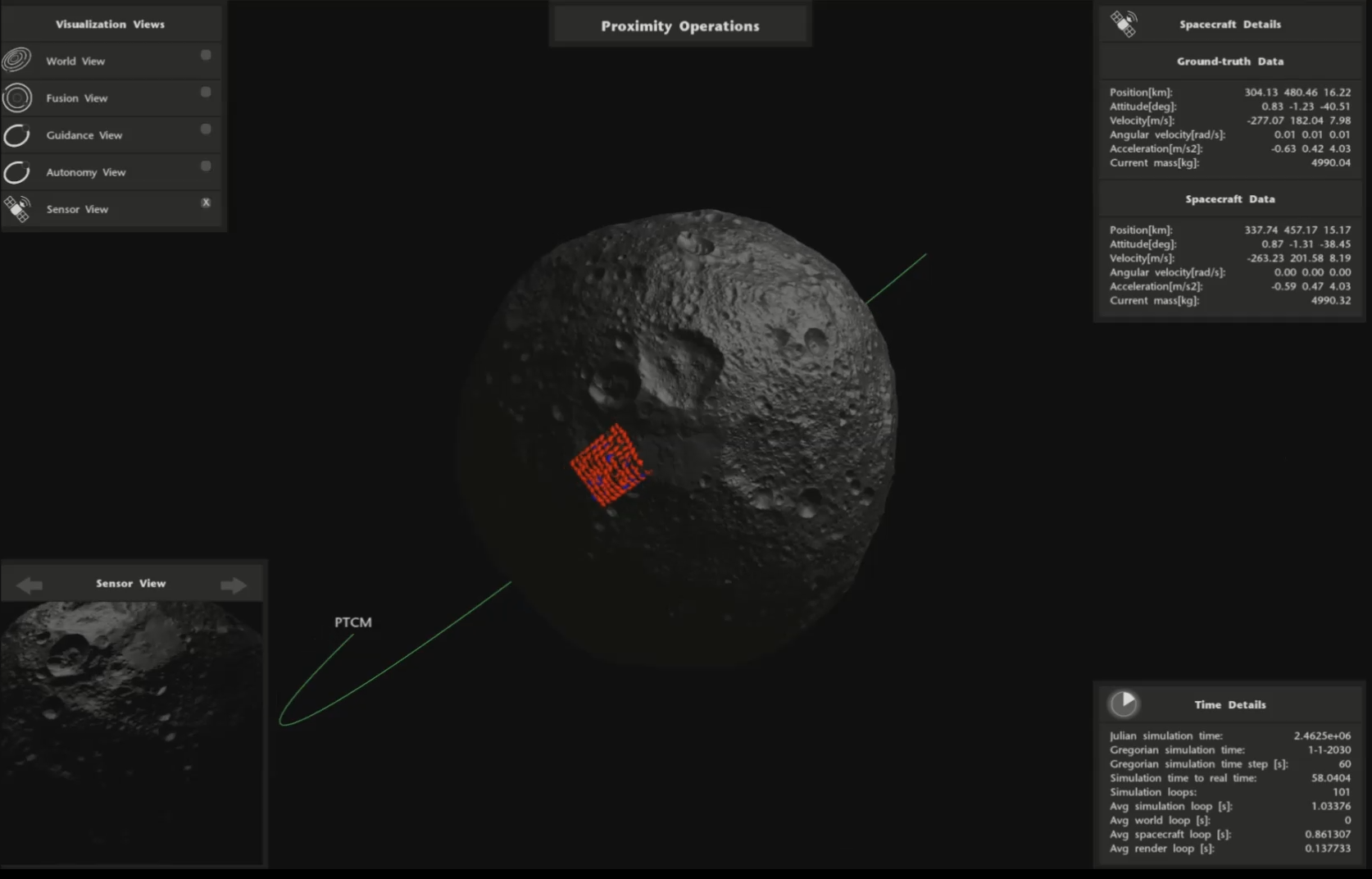

Using our procedurally generated asteroids, camera images for asteroid approaches can be synthesized (close approach).

Using our procedurally generated asteroids, camera images for asteroid approaches can be synthesized (far approach).

Using our procedurally generated asteroids, camera images for asteroid approaches can be synthesized (proximity).

Early demo of the cruise phase, illustrating optimal trajectory computation and spacecraft localization.

Images

Our artistically enhanced PTCM model (left) based on the original design.

Our artistically enhanced Itokawa model. The model is based on the original Itokawa data.

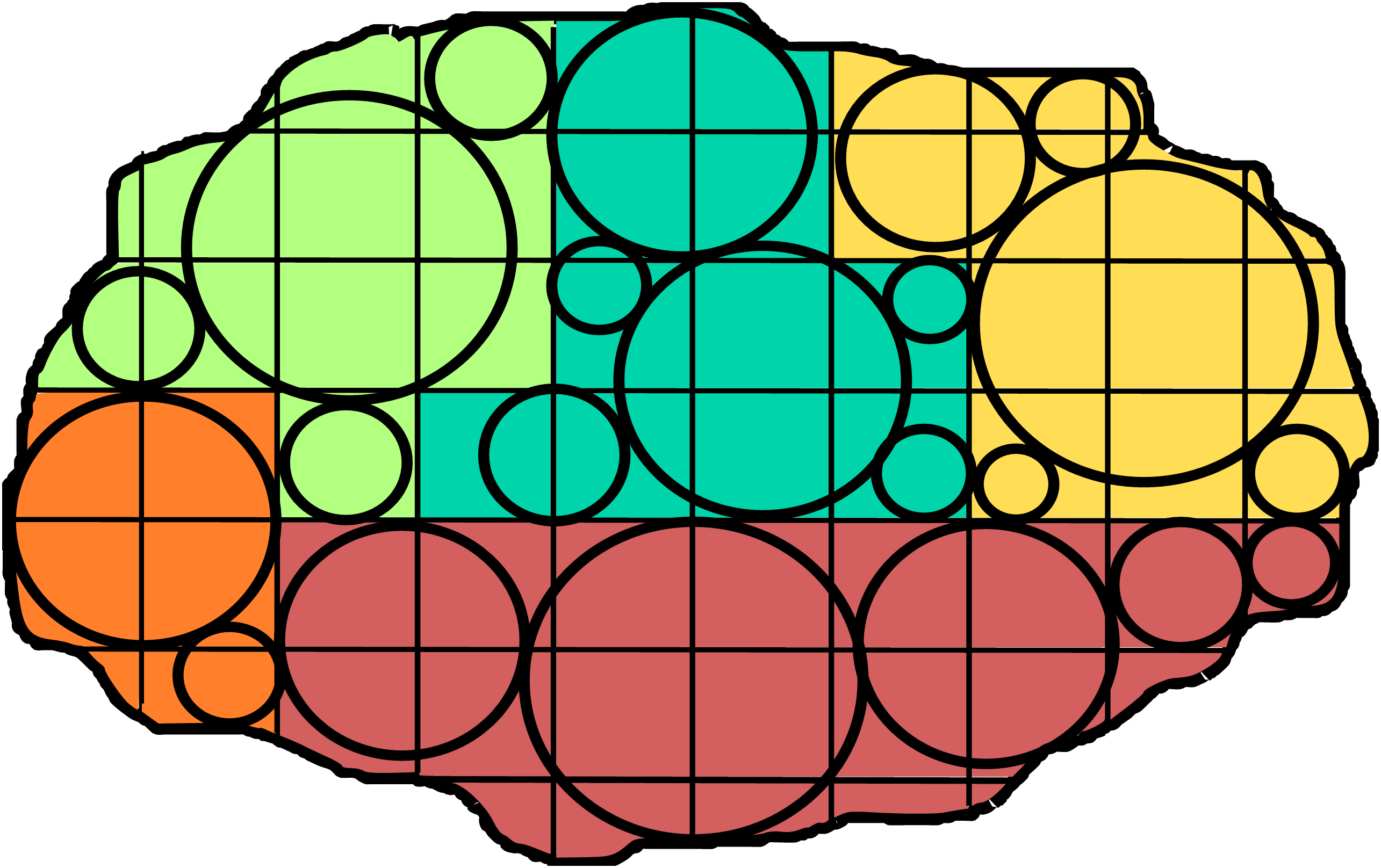

Itokawa sphere packing for gravity computation and material distribution of asteroids.

Illustration of our sphere-packing concept: arbitrary mass distributions can be approximated with uniform spheres, each with a different mass.

Illustration of the proximity operations; the flown trajectory is shown in green.

Visualizing lidar measurements (red) in the proximity operation simulation.

Visualizing surface landmarks (light blue) and lidar measurements (red) in the proximity operation simulation.

Visualizing the uncertainty of the spacecraft SLAM approach as a transparent sphere.

Related theses

- Bachelor thesis: Echtzeitsimulation von Staubpartikeln für Asteroiden (Real-Time Simulation of Dust Particles for Asteroids) (finished).

- Master thesis: A Programming Language for Procedural Content Generation (finished).

- Master thesis: Texture Synthesis for Generating Realistic Asteroid Surfaces (finished).

- Master thesis: Procedural Generation of Asteroids - working title (ongoing).