Real-time camera-based 3D hand tracking

Hand tracking has applications in many fields, for example navigation in virtual environments, virtual prototyping, gesture recognition, and motion capture.

The goal of this project is to track the global position and all finger joint angles of a human hand in real-time.

Measurement noise, occlusion, cluttered backgrounds, poor illumination, the high dimensionality of the pose space, and real-time constraints make hand tracking a scientific challenge.

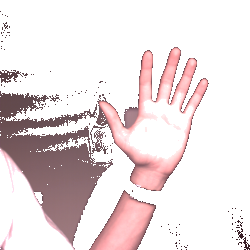

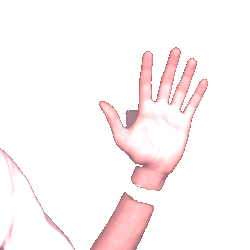

We use multiple cameras to capture images of the hand from different directions.

Cues such as skin segmentation, edges, skin texture, and the previous hand position can be used to extract the 2D shape of the hand in each image. We use dimension reduction techniques to cope with the high complexity of the tracking problem: the hand has about 21 local degrees of freedom (DOFs) for the finger joints and 6 global DOFs for position and orientation.

The following figure shows the overall architecture of our system.

Poster

This poster illustrates the main steps of the tracking algorithm.

Publications

- Model-Based High-Dimensional Pose Estimation with Application to Hand Tracking

Dissertation, 2012 (Staats- und Universitätsbibliothek Bremen)

- A Comparative Evaluation of Three Skin Color Detection Approaches

In GI AR/VR Workshop 2012, Düsseldorf, Germany, September 2012

- Segmentation-Free, Area-Based Articulated Object Tracking

ISVC 2011 (7th International Symposium on Visual Computing), Las Vegas, Nevada, USA, October 2011

- FAST: Fast Adaptive Silhouette Area based Template Matching

BMVC 2010 (British Machine Vision Conference 2010), Aberystwyth, UK, August-September 2010

- Silhouette Area Based Similarity Measure for Template Matching in Constant Time

AMDO 2010 (6th International Conference of Articulated Motion and Deformable Objects), Port d'Andratx, Mallorca, Spain, July 2010

- Erratum: Eq. 5 does not take into account all rectangle configurations, i.e. we do not obtain the minimum number of rectangles for all areas.

- Continuous Edge Gradient-Based Template Matching for Articulated Objects.

VISAPP 2009 (International Conference on Computer Vision Theory and Applications), Lisbon, Portugal, February 2009

- Segmentation of Distinct Homogeneous Color Regions in Images.

CAIP 2007 (The 12th International Conference on Computer Analysis of Images and Patterns), Vienna University of Technology, August 2007

- More publications can be found on our list of publications.


Results

Segmentation-Free, Area-Based Articulated Object Tracking

Videos

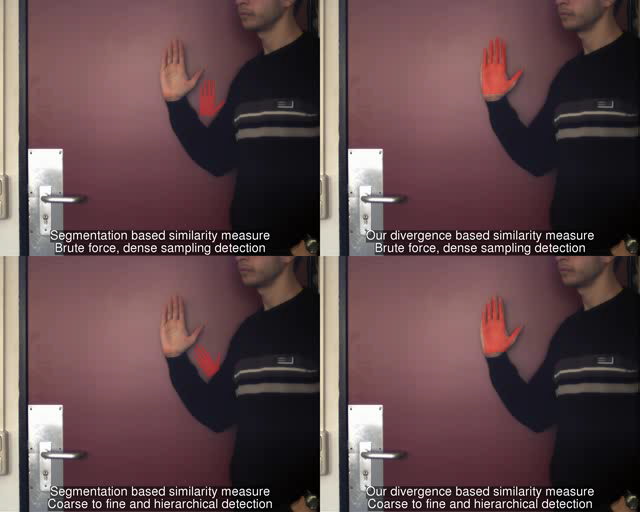

The video shows a visual comparison of a conventional skin-segmentation-based similarity computation (left column) with our novel divergence-based one (right column). Both similarity measures are combined with a brute-force search (upper row) and our new smart mode-finding approach (bottom row) to detect the best matching position and hand pose.

The results show that the divergence-based approach outperforms the conventional approach in situations where skin segmentation does not work well.
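To make the brute-force baseline concrete, here is a minimal sketch of an exhaustive search over all templates and positions. It is not the implementation used in the paper: the similarity function `sum_under_template`, the templates given as pixel offsets, and the toy image are all assumptions made for illustration, and the smart mode-finding variant is not shown.

```python
def brute_force_match(score, templates, width, height):
    """Evaluate every template at every position and keep the best score."""
    best = None
    for name, offsets in templates.items():
        for y in range(height):
            for x in range(width):
                s = score(offsets, x, y)
                if best is None or s > best[0]:
                    best = (s, name, x, y)
    return best


# Toy likelihood image with a bright 2x2 blob (made-up values).
image = [
    [0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.9, 0.9, 0.1],
    [0.1, 0.1, 0.9, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1],
]


def sum_under_template(offsets, x, y):
    """Hypothetical similarity: sum of image values under the template pixels.

    Pixels that fall outside the image contribute nothing.
    """
    total = 0.0
    for dx, dy in offsets:
        px, py = x + dx, y + dy
        if 0 <= py < len(image) and 0 <= px < len(image[0]):
            total += image[py][px]
    return total


# Two hypothetical templates, each a list of (dx, dy) pixel offsets.
templates = {
    "square": [(0, 0), (1, 0), (0, 1), (1, 1)],
    "bar": [(0, 0), (1, 0), (2, 0), (3, 0)],
}

best_score, best_name, best_x, best_y = brute_force_match(
    sum_under_template, templates, width=len(image[0]), height=len(image)
)
```

The cost grows with the number of templates times the number of positions, which is why an approach that evaluates fewer candidates is attractive for real-time use.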

FAST: Fast Adaptive Silhouette Area based Template Matching

Videos

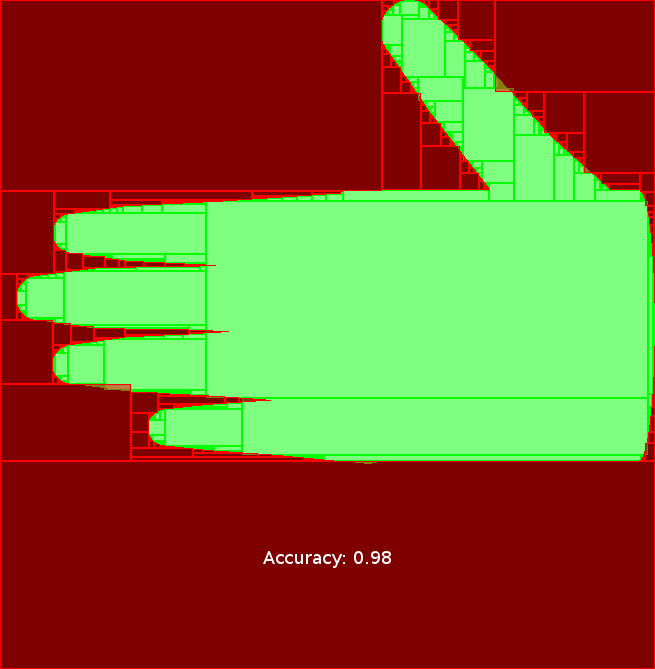

The leftmost video demonstrates the rectangle covering computation algorithm.

The middle and right videos demonstrate the matching approach. Of most interest is the

bottom left panel that shows the rectangular representation of the templates.

We would like to point out that, at matching time, we can adaptively choose the matching accuracy

by simply adjusting the number of rectangles per template used to compute the similarity.
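The accuracy/speed trade-off can be sketched as follows. This is an illustration under assumptions, not the FAST algorithm itself: it assumes a template is a list of non-overlapping rectangles `(x0, y0, x1, y1)` with exclusive upper bounds, and that a coarser template is obtained by keeping the largest rectangles first. The function names and the toy template are hypothetical.

```python
def rect_area(rect):
    """Area of an axis-aligned rectangle (x0, y0, x1, y1), upper bounds exclusive."""
    x0, y0, x1, y1 = rect
    return (x1 - x0) * (y1 - y0)


def truncate_template(rects, max_rects):
    """Keep only the max_rects largest rectangles (assumed selection rule)."""
    return sorted(rects, key=rect_area, reverse=True)[:max_rects]


def coverage_ratio(rects, full_rects):
    """Fraction of the full template area covered by the kept rectangles.

    Assumes the rectangles do not overlap.
    """
    return sum(map(rect_area, rects)) / sum(map(rect_area, full_rects))


# Toy hand-like template: a palm, three fingers, and a thumb (made-up shape).
template = [
    (0, 4, 8, 12),   # palm
    (0, 0, 2, 4),    # finger
    (3, 0, 5, 4),    # finger
    (6, 0, 8, 4),    # finger
    (8, 6, 11, 8),   # thumb
]

# Fewer rectangles mean fewer lookups per match but a coarser silhouette.
coverages = [
    coverage_ratio(truncate_template(template, k), template) for k in (1, 3, 5)
]
```

In this toy case, one rectangle already covers about two thirds of the silhouette area, and each additional rectangle refines the shape at the cost of more work per candidate position.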

Silhouette Area Based Similarity Measure for Template Matching in Constant Time

Videos

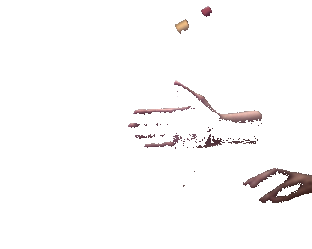

The video demonstrates the main steps of our area based similarity measure.

The skin segmentation is applied to the input image. As a result, we get the skin likelihood map shown in the top right panel.

The templates are represented by a very compact data structure consisting of a set of rectangles (bottom left panel).

We compute a similarity measure based on the per-pixel joint probability. Using the integral image of the log-likelihood, we can compute the similarity measure more than 10 times faster than state-of-the-art approaches.
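The role of the integral image can be illustrated with a small sketch. It is not the authors' implementation and makes several assumptions: the template is a set of axis-aligned rectangles `(x0, y0, x1, y1)` with exclusive upper bounds, the score is only the sum of per-pixel log-likelihoods inside the template (the log of a joint probability of independent pixels), and all function names and values are hypothetical.

```python
import math


def integral_image(values):
    """Summed-area table with a zero border: ii[y][x] = sum of values[:y][:x]."""
    h, w = len(values), len(values[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += values[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x0, y0, x1, y1):
    """Sum over [x0, x1) x [y0, y1) using four table lookups."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]


def template_log_likelihood(ii, rects, dx=0, dy=0):
    """Sum of log-likelihoods over all template rectangles shifted by (dx, dy)."""
    return sum(
        rect_sum(ii, x0 + dx, y0 + dy, x1 + dx, y1 + dy)
        for x0, y0, x1, y1 in rects
    )


# Toy 4x4 skin likelihood map with values in (0, 1]; made-up numbers.
skin = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.9, 0.9, 0.1],
    [0.1, 0.9, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
log_skin = [[math.log(p) for p in row] for row in skin]
ii = integral_image(log_skin)

template = [(0, 0, 2, 2)]  # a single 2x2 rectangle as a hypothetical template
score_on_hand = template_log_likelihood(ii, template, dx=1, dy=1)
score_off_hand = template_log_likelihood(ii, template, dx=0, dy=0)
```

Each rectangle costs four lookups regardless of its size, so the cost of one similarity evaluation depends on the number of rectangles in the template rather than on the number of pixels it covers.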

Continuous Edge Gradient-Based Template Matching

Videos

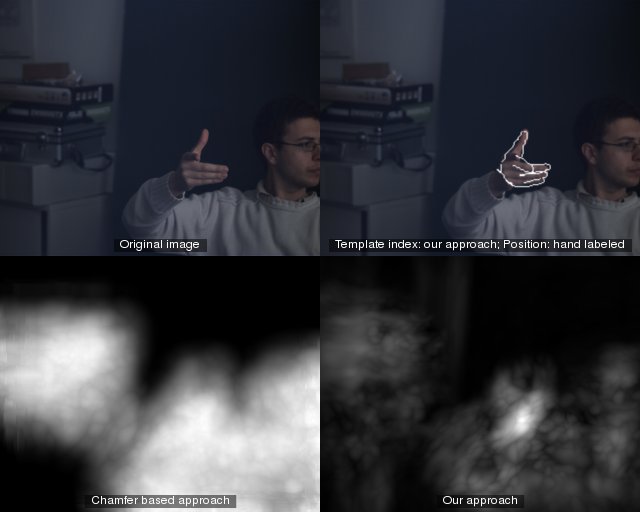

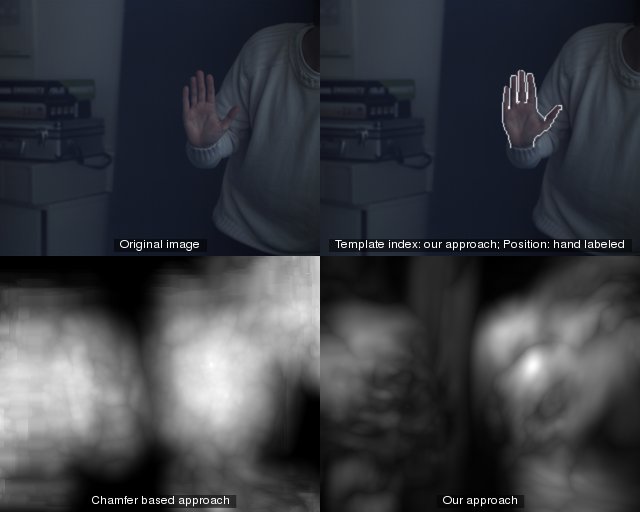

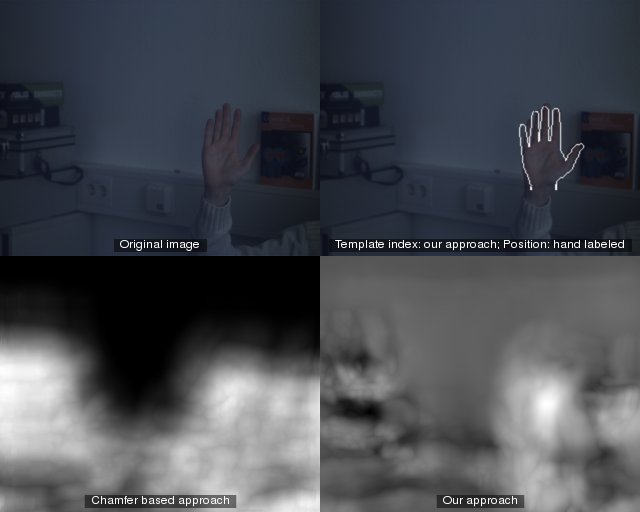

| Original image | Best matching template determined by our approach, superimposed at the hand-labeled position |
| --- | --- |
| Combined confidence map generated by the chamfer-based approach | Combined confidence map generated by our approach |

The videos demonstrate that our approach generates fewer and much more significant

maxima (possible hand positions), which leads to considerably easier true hand position finding.

Note:

- At each frame, the values in the combined confidence maps are scaled to the full gray scale range for better visualization.

- The homogeneous black regions in the confidence map generated by the chamfer distance are caused by the edge-pixel-to-edge-pixel distance limit of 20. At every pixel in these black regions, the maximum distance is reached.
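The two notes can be reproduced with a small sketch. It is an illustration only: the distance limit of 20 comes from the note above, but turning a clamped distance into a confidence as `limit - distance` is an assumption, and the function names and sample values are hypothetical.

```python
MAX_EDGE_DISTANCE = 20  # distance limit stated in the note above


def clamp_distances(dist_map, limit=MAX_EDGE_DISTANCE):
    """Cap every distance at the limit, as a truncated chamfer distance does."""
    return [[min(d, limit) for d in row] for row in dist_map]


def scale_to_gray(conf_map):
    """Linearly stretch one frame's values to the full 0..255 gray range."""
    lo = min(min(row) for row in conf_map)
    hi = max(max(row) for row in conf_map)
    if hi == lo:
        # A constant map carries no contrast; render it as black.
        return [[0 for _ in row] for row in conf_map]
    scale = 255.0 / (hi - lo)
    return [[round((v - lo) * scale) for v in row] for row in conf_map]


# One row of made-up edge distances, some beyond the limit.
distances = [[0, 5, 20, 35, 50]]
clamped = clamp_distances(distances)

# Assumed mapping from distance to confidence: closer edges score higher.
confidence = [[MAX_EDGE_DISTANCE - d for d in row] for row in clamped]
gray = scale_to_gray(confidence)
```

All pixels at or beyond the limit end up with the same minimum confidence, so after scaling they form a uniform black region, which matches the appearance described in the second note.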


Skin Segmentation

Comparison images (columns, left to right): original image, Jones and Rehg, our approach.

Video

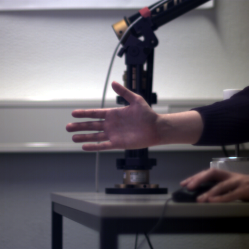

The video shows the segmentation algorithm. The camera is positioned at the rear right. On the screen, the left window shows the image captured by the camera and the right window shows the segmentation result of our algorithm.